Demoting, deplatforming and replatforming COVID-19 misinformation

Team Members

Emillie de Keulenaar, Ivan Kisjes, Anthony Burton, Jasper van der Heide, Dieuwertje Luitse, Eleonora Cappuccio, Guilherme Appolinário, Narzanin Massoumi, Tom Mills, Amy Harris, Jörn Preuß

Contents

- Team Members

- Contents

- Summary of key findings

- 1. Introduction

- 2. Research Questions

- 3. Initial datasets

- 4. Research questions

- 5. Methodology

- 5.1. Close reading YouTube’s content moderation techniques (2013-2020)

- 5.2. Assessing YouTube’s content moderation of COVID misinformation: demotion

- 5.3. Assessing YouTube’s content moderation of COVID misinformation: deplatforming

- 5.4. Mapping the “replatformisation” of deplatformed contents: the case of Plandemic

- 5.5. Comparing content moderation of COVID misinformation with the content moderation of hate speech on YouTube

- 6. Findings

- 6.2.3. Conversely, specific information, such as falsehoods on COVID-19 transmission, prevention, diagnostics and treatment, is more easily defined and detected, and thus removed

- 6.2.4. YouTube-stated motives for banning videos reflect the platform’s difficulty in defining and detecting “borderline information” over hate speech

- 6.3. YouTube has had a significant role in the user engagement and circulation of the Plandemic video across the web

- 7. Discussion

- 8. Concluding summary

- 9. References

Summary of key findings

One of our key findings is that deplatforming COVID misinformation, in particular a controversial documentary by the name of Plandemic, has decentralised its access into various alternative access points (Bitchute, Internet Archive, banned.video and ugetube), dispersing engagement numbers. Another is that demotion of COVID misinformation (or “borderline content”) is not always effective in keeping complex conspiracies (e.g., QAnon and the New World Order) out of view. Finally, we also found that hate speech policies have dramatically decreased misogynous and racist extreme speech, though antisemitic language remains somewhat present.

1. Introduction

The origin, treatment and prevention of COVID-19 are matters that even authoritative sources, namely governments and public health organisations, have not always been certain about. Speculations akin to conspiracy or “alternative” theories of the virus have complemented public uncertainty, providing citizens with a sense of reality where there is little. Since January 2020, claims that the virus is, for example, an American or Chinese bioweapon, a secret plan by Bill Gates to implant trackable microchips or a side effect of 5G radiation have gained popularity on Facebook, YouTube, Twitter and Instagram (Fisher, 2020; Goodman & Carmichael, 2020; Uscinski, 2020). Their banality on social media was allegedly such that the World Health Organization spoke of a “massive infodemic” (2020), noting in passing that it was counting on social media platforms to track, report and debunk COVID-19 misinformation.

Given their tendency to complement public uncertainty, conspiracies have become challenging for content moderation techniques to suppress. Actively moderated platforms (Facebook, Twitter, YouTube, Reddit, Instagram) occasionally end up becoming a subject of the misinformation they seek to shut down. Users’ resistance to content moderation is indicative of the persistence of deplatformed contents in, for example, alternative or “alt-tech” copies of actively moderated platforms. When deletion or “deplatforming” of problematic contents occurs, these contents can make their way to various alternative platforms, such as Gab, Parler, Bitchute, Banned, Ugetube and even the Internet Archive.

As a content moderation technique, “deplatforming” has earned a higher degree of interest among journalistic and scholarly research (e.g. Hern, 2018; Alexander, 2020a). Active moderation marks a clear rupture from the earlier ethos of Facebook, Twitter, and YouTube as open, free or participatory alternatives to mass media (Langlois, 2013). Considering that figures like Milo Yiannopoulos or Alex Jones “mellowed” their hitherto “extreme” political language and that their audience has thinned (Rogers, 2020), studies have also given reason to believe that deplatforming is an effective strategy for policing problematic information (Chandrasekharan et al., 2017).

However, reflections on the effectiveness (and ethics) of deplatforming have yet to measure the effects of the migration of deleted COVID-19 misinformation to alternative platforms. In a recent study, Rogers maps an “alternative social media ecosystem” (2020) by tracing the migration of a group of extreme political celebrities to Telegram and BitChute, and finds that these celebrities have effectively lost their following. Still, the New York Times (2020) reports that high user engagement with controversial (mis)information on COVID-19 (such as the Plandemic documentary) may pose new challenges for content moderation and contribute to altering the relationship between mainstream platforms and alternative social media ecosystems.

This particular project can be seen as a follow-up of Rogers’ study on the effectiveness of deplatforming, in that it also asks whether deplatforming has successfully reduced engagement in problematic COVID misinformation (including the Plandemic documentary). Although it is unlikely that alternative platform ecologies effectively compete with mainstream platforms (Roose, 2017), we hypothesise that they nonetheless constitute a substantial alternative media diet for users in mainstream social media platforms, and that they work not in isolation but in tandem with mainstream platforms as co-dependent “milieus” (Deleuze & Guattari, 1987, p. 313).

This project also assesses the impact of additional content moderation techniques, such as demotion (“burying down” problematic or “borderline” contents in search and recommendation results). Combined with its study of deplatforming, it tries to make visible back-end manipulations whose implementation, effect and policies are notoriously obscure (Caplan, 2018; Roberts, 2018; Bruns, Bechmann & Burgess, 2018; Gorwa, Binns & Katzenbach, 2020; Rogers, 2020; Gillespie, 2020).

This implies first understanding the rationale and implementation of both content moderation techniques by close-reading content moderation policies. Focusing on YouTube alone, we use the Wayback Machine to trace changes in anti-misinformation policies, take note of the information YouTube labels as undesired or problematic (and why), and how it is dealt with. For demotions, we capture the search rankings of results for COVID misinformation queries over time. For deplatforming, we verify the availability or status of the same search results, as well as the specific reasons why they have been taken down (e.g., “This video has been removed due to hate speech”). We then capture contents that have been replatformed on known alt-tech websites (Bitchute, Ugetube, Banned) or results for deplatformed videos queried in DuckDuckGo and Bing from March to June 2020.

2. Research Questions

- How have YouTube’s content moderation policies adapted to the spread and evolution of COVID-19 misinformation, particularly conspiracy theories?

- Given high user engagement rates for COVID conspiracy theories, how effective have YouTube moderation techniques been in demoting and deplatforming problematic contents?

- Given the recent termination of around 25,000 channels for hate speech violations (Alexander, 2020a), how do YouTube’s anti-misinformation and hate speech moderation efforts compare?

3. Initial datasets

The objective of this project was to assess the impact of demoting and deplatforming COVID-19 misinformation on YouTube. To do so, we needed to understand how these two content moderation techniques functioned; what kinds of problematic information they are after; and where that information goes to when it is deleted.

This is why we relied on three groups of data:

- YouTube content moderation policies about misinformation, collected with the Wayback Machine;

- Misinformation, defined here as results for various COVID conspiracy queries;

- Videos taken down by YouTube that are listed on Bitchute, DuckDuckGo and Bing.

Due to the propensity of conspiracy videos to be platform-moderated, these datasets have been iteratively collected between March and July 2020 to ensure that no deleted videos were lost.

3.1. Internet Archive – Wayback Machine

We used the Wayback Machine to annotate significant changes in two to three YouTube content moderation policies related to misinformation: (1) COVID-19 medical misinformation (“COVID-19 Medical Misinformation,” 2020) and (2) policies used to counter spam, deceptive practices and scams (“Spam, deceptive practices & scams,” 2020). Because this project took place in a period of high-profile moderation of hate (and violent) speech, we also judged it worthwhile to examine (3) YouTube’s anti-hate speech policies (“Hate speech policy,” 2019).

Policy changes, including on the types of content moderation techniques listed, were located and annotated using the Wayback Machine’s Changes tool (Internet Archive, 2020). We retrieved screenshots for each Wayback Machine URL showing significant policy changes using the DMI Screenshot Generator tool (2018).
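As a rough illustration of how captures with changed content could be listed programmatically, the sketch below builds a query for the Wayback Machine's public CDX index and keeps one capture per distinct content digest. It is not the Changes tool used in this project, and the function names are hypothetical; it only shows one possible way to shortlist snapshots before close reading.

```python
import json
from urllib.parse import urlencode
from urllib.request import urlopen

CDX_ENDPOINT = "http://web.archive.org/cdx/search/cdx"


def build_cdx_url(page_url, start="2013", end="2020"):
    """Build a CDX query returning one capture per distinct content digest."""
    params = {
        "url": page_url,
        "from": start,
        "to": end,
        "output": "json",
        "collapse": "digest",  # skip consecutive captures with identical content
    }
    return CDX_ENDPOINT + "?" + urlencode(params)


def parse_cdx_rows(rows):
    """Turn the CDX JSON payload (first row is the header) into dicts."""
    if not rows:
        return []
    header, *captures = rows
    return [dict(zip(header, capture)) for capture in captures]


def snapshot_url(capture):
    """Address of the archived copy for one capture."""
    return "https://web.archive.org/web/{timestamp}/{original}".format(**capture)


def fetch_changed_captures(page_url, start="2013", end="2020"):
    """Network call: list captures of page_url whose content differs."""
    with urlopen(build_cdx_url(page_url, start, end)) as response:
        return parse_cdx_rows(json.load(response))
```

Each returned capture could then be opened through `snapshot_url` and screenshotted or annotated by hand; whether a change counts as "significant" remains a manual judgement.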

3.2. YouTube: demotion and deplatforming

To obtain COVID-19 misinformation, we queried videos related to several COVID conspiracies. These queries were extracted from both news media and messaging boards heavily invested in such conspiracies, such as 4chan/pol. To avoid collecting false positives, we sought to collect vernacular expressions of conspiracies usually unknown or unreported by the news media (e.g., “5gee” instead of 5G). All video content and metadata were collected with YouTube DL.

Queries were divided into 13 categories (Table 1). Resulting datasets included metadata on YouTube channels, video ids, URLs, comments, video transcripts, views, likes and dislikes, search rankings, banned videos and banned status (e.g., “This video has been removed due to copyright.”).

| Category of Queries | Queries |
| --- | --- |
| Qanon | q; qanon; cabal; trust the plan; the storm; great awakening; wwg1wa; wwg1waworldwide; TheStormIsHere; TheGreatAwakening; the storm |
| China Bioweapon | bioweapon chin; Wuhan Institute of Virology; chinese lab; bioweapon; Chin* OR Wuhan Institute of Virology; chinese lab |
| American Bioweapon | bioweapon; americ*; Military World Games; bioweapon AND (Ameirc* OR "Military World Games") |
| New World Order | "new world order"; NWO; "FEMA camp*; "martial law"; "agenda 21"; depopulation; new world order" OR NWO OR "FEMA camp*" OR "martial law" OR "agenda 21" OR depopulation |
| 5G | 5G; radiation; sickness; poisoning AND "5G" AND (radiation OR sickness OR poisoning) |
| Bill Gates | "Bill Gates"; "Gates Foundation"; "event 201" AND ("Bill Gates" OR "Gates Foundation" OR "event 201") |
| Hoax | hysteri*; hoax; sabotage; paranoi*; (hysteri* OR hoax OR sabotage OR paranoi*) |
| FilmYourHospital | #FilmYourHospital |
| Big Pharma | "big pharma" AND (corona* OR COVID*) |
| Anti Vaccination | (corona* OR COVID*) AND (informedconsent \| parentalrights \| researchbeforeyouregret \| religiousfreedom \| visforvaccine \| healthchoice \| readtheinsert \| healthfreedom \| deathtovaccines2020 \| vis4vaccines \| medicalrights \| believemothers \| medicalfreedomofchoice \| childrenshealth \| protruth \| medicalexemption \| vaccinationchoice \| betweenmeandmydoctor \| dotheresearch \| learntherisk \| freedomkeepers \| vaccineinjury) |
| Chinese Cover up | covid OR corona AND chin cover |
| Other | plandemic |

Table 1. Query design for data collection on YouTube.

For additional findings on hate speech content moderation, we used a dataset of 1500 English-speaking political channels collected between November and April of 2018 by Dimitri Tokmetzis, political extremism reporter at De Correspondent (Tokmetzis, 2019). Metadata includes channel IDs, names, language, country, views, comments, subscriptions, descriptions, political orientation (summarised as “left-wing” or “right-wing”) and their status before and after the end of 2018 (available, suspended and motives for suspension).

3.3. Replatforming

3.3.1. BitChute and DuckDuckGo

For every video that was classified as deleted or unavailable on YouTube, we used Bitchute’s, DuckDuckGo’s and Bing’s APIs to obtain (a) re-uploaded videos; or, in the case of DuckDuckGo and Bing, (b) search results for every deleted video. Every search comprises the name of the deleted video and its top 50 results.

3.3.2. Replatforming Plandemic

We traced the re-uploads or “replatformisation” of a specific piece of misinformation: the documentary Plandemic (2020). We collected copies, comments and video commentaries on Bitchute, Ugetube and Banned. We also collected comments mentioning the word “plandemic” on major social media platforms (Facebook, Instagram and Reddit) so as to measure user engagement with the documentary, regardless of its online status.

3.3.2.1. Plandemic on Facebook and Instagram

Facebook data consisted of posts from 262 pages and 132 groups with over 1,000 members involved in COVID conspiracy discussions. We searched and collected groups and pages based on the 13 above-mentioned categories of queries on Crowdtangle. Data was collected between end of March and July, 2020.

Similar to Facebook, Instagram data consisted of accounts involved in discussions of COVID conspiracies. We searched these accounts with every above-mentioned query. Accounts were retrieved with Crowdtangle. Data was collected between end of March and July, 2020.

3.3.2.2. Plandemic on Reddit, 4chan and Gab

We collected Reddit comments that contained the word “plandemic” using Pushshift (Baumgartner et al., 2020). Data was collected on June 30th, 2020.

4chan posts were collected from the “politically incorrect” board, /pol/, where conspiracy discussions are frequently held. We collected every post that mentioned the word “plandemic” using 4CAT (Peeters and Hagen, 2019) on June 30th, 2020.

We collected Gab posts that mentioned the word “plandemic” using Pushshift on June 30th, 2020.

3.3.2.3. Plandemic on Twitter

We collected tweets that mentioned the word "plandemic" from 2020-01-01 by using the Twitter Search API and web scraping. All tweets were retrieved on July 3rd, 2020.

4. Research questions

5. Methodology

5.1. Close reading YouTube’s content moderation techniques (2013-2020)

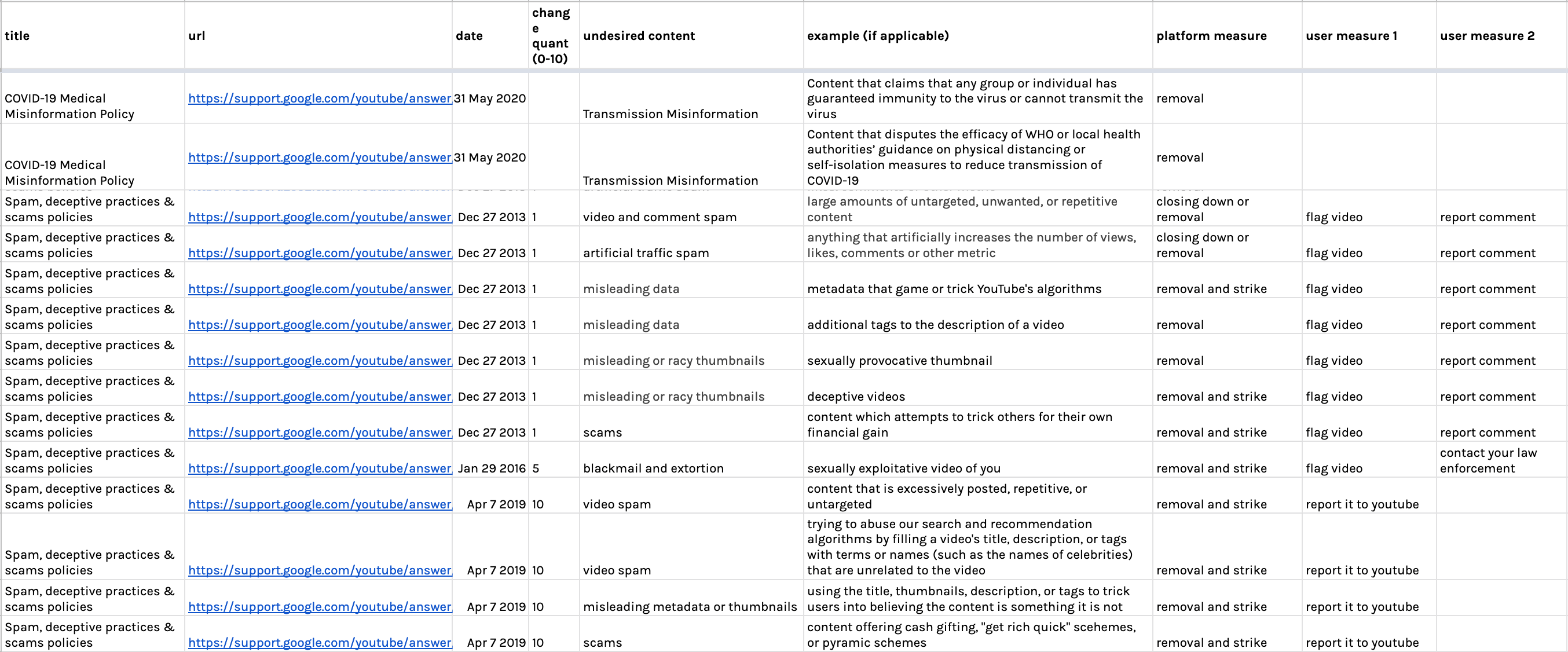

To examine how YouTube’s content moderation techniques have adapted to the spread of COVID-19 misinformation, we used the Wayback Machine’s Changes and the DMI screenshot tools to select and collect significant HTML changes. Close-reading the resulting screenshots, we coded (a) undesired contents YouTube mentions (e.g., “comment spam”) and (b) measures used to combat them, distinguishing platform from user-driven techniques. We also registered other metadata, such as content moderation titles; date of change; amounts of change; examples of undesired contents; and additional user measures.

Figure 1. Example of metadata collected from the Wayback Machine’s Changes tool

We laid out each instance of significant change, per content moderation type, in separate timelines. In these timelines, we highlighted the undesired information listed by YouTube, as well as the platform or user-driven techniques used to counter it.

In addition, we made screencasts out of all collected content policy screenshots. A “screencast documentary” approach makes possible a narration of particular histories of the web (Rogers, 2018, 2019). Our screencasts replace the usual audio description of changes with written text.

5.2. Assessing YouTube’s content moderation of COVID misinformation: demotion

As we understood the importance of demotion for YouTube to counter misinformation (particularly from 2019 onwards), we relied on Rieder (2020) to measure the effects of this technique in moderating the channels posting the COVID conspiracy videos we collected. The policies we examined reveal that YouTube uses demotion by “promoting” or up-ranking “authoritative contents”, such as news media and other “trusted sources”. YouTube also claims that “borderline contents” (contents that defy clear-cut qualifications as “acceptable” or “unacceptable”) are usually buried down in search and recommendation results.

Correspondingly, we first coded COVID conspiracy channels per authority and “borderline” quality. Our coding was of course approximate: we do not know how YouTube performs this coding internally. Based on its own terminology, we defined “authoritative contents” as news media (e.g., FRANCE 24 English); medical sources (e.g., University of Michigan School of Public Health); government (e.g., Australian Government Department of Health); and research (e.g., University of Birmingham, without any further medical specification). Of the 1,059 channels, 539 were authoritative.

Contents were identified as “borderline” if pertaining to networks of conspiracist channels, which were identified by members of the project COVID-19 Conspiracy Tribes Across Instagram, TikTok, Telegram and YouTube (see the YouTube Method section). Of the 1,059 channels, 519 were borderline.

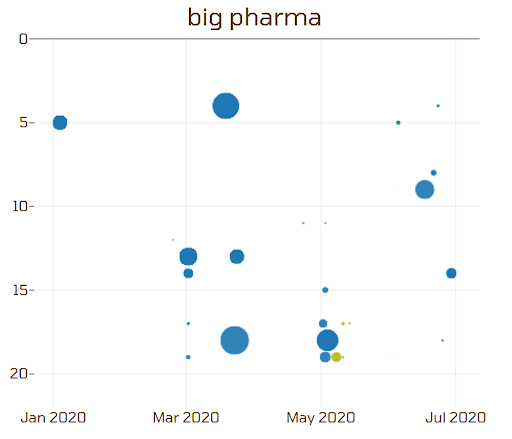

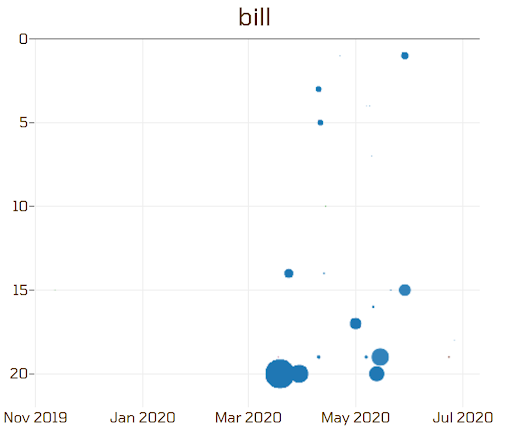

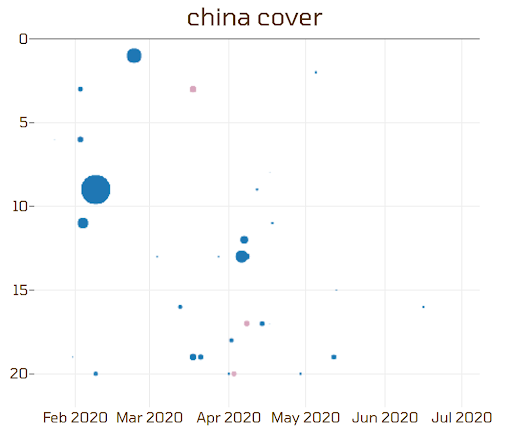

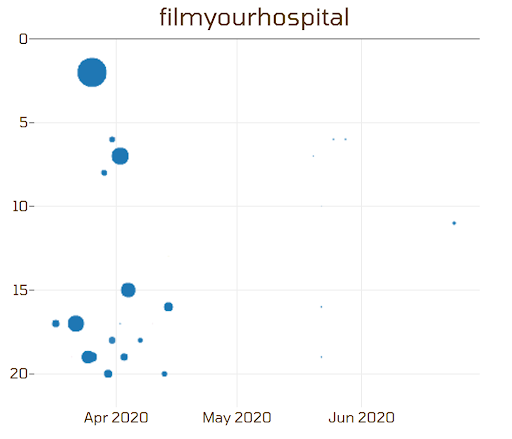

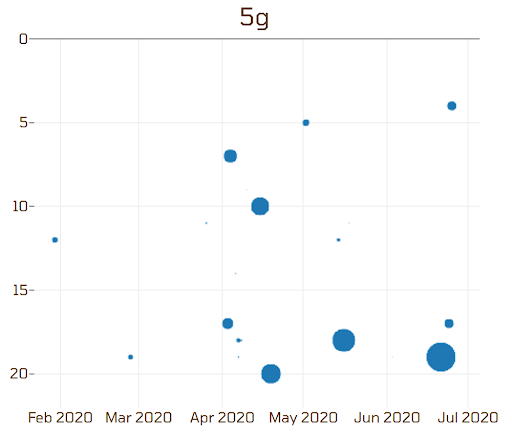

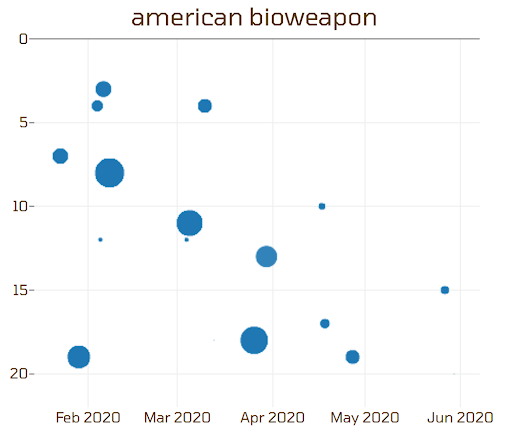

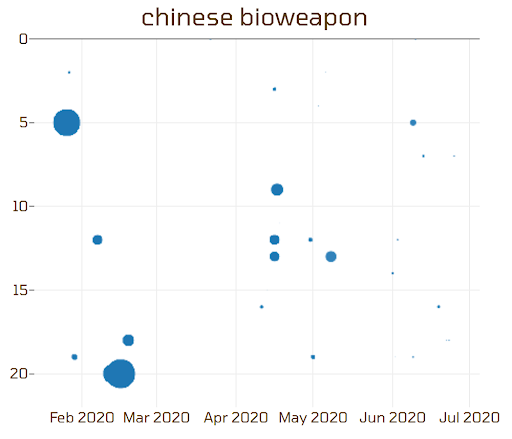

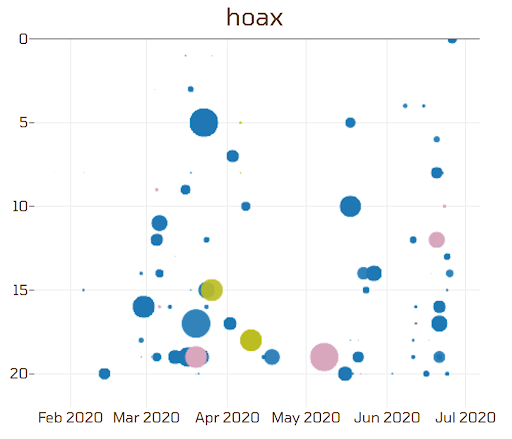

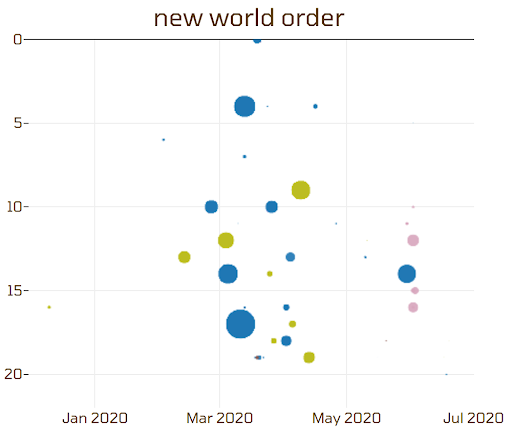

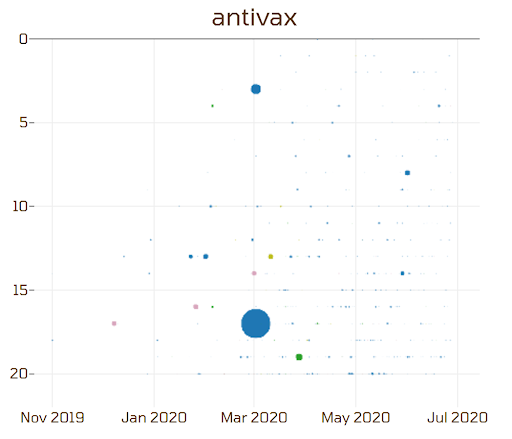

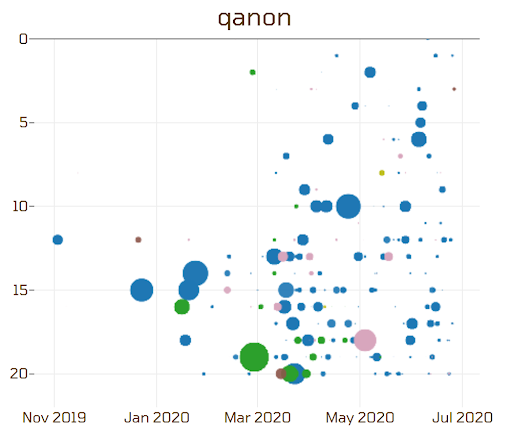

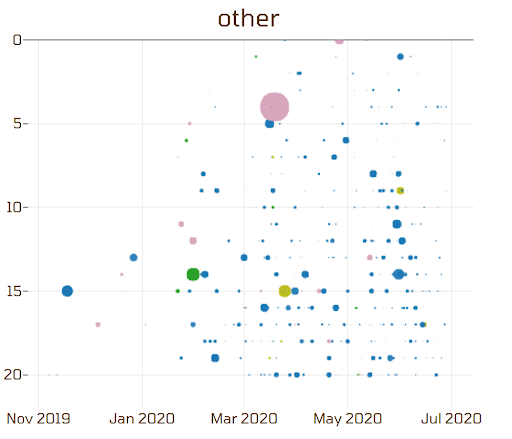

To trace the demotion of videos by borderline channels, we used YouTube search rankings metadata. In 11 scatterplots, we visualised the ranking position of each result per (1) authoritative and borderline quality and (2) query (see Initial Datasets).

We also know that engagement metrics bear a certain weight in rankings of search results. YouTube conference proceedings (Davidson et al., 2010; Covington et al., 2016) and content policies claim that rankings are determined (in part) by video popularity, which is itself partly measured by number of views. We indicated view counts with dot size, with bigger dots indicating videos with more views.
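The mapping from coded search results to scatterplot points can be sketched as follows. This is a minimal illustration rather than the code used for the figures: the record fields (`date`, `rank`, `channel_type`, `views`) and the linear scaling of dot size are assumptions.

```python
def to_plot_points(results, max_marker_area=400.0):
    """Map coded search results to scatterplot points.

    results: list of dicts with 'date', 'rank', 'channel_type'
    ('authoritative' or 'borderline') and 'views' (hypothetical field names).
    Marker area is proportional to a video's share of the largest view count.
    """
    top_views = max((r["views"] for r in results), default=0)
    points = []
    for r in results:
        share = r["views"] / top_views if top_views else 0.0
        points.append({
            "x": r["date"],              # date on which the query was run
            "y": r["rank"],              # position in the search results
            "group": r["channel_type"],  # used to colour the dot
            "size": max_marker_area * share,
        })
    return points
```

The resulting `x`, `y` and `size` values could be handed to any plotting library, for instance as the `x`, `y` and `s` arguments of matplotlib's `scatter`, with one colour per `group`.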

5.3. Assessing YouTube’s content moderation of COVID misinformation: deplatforming

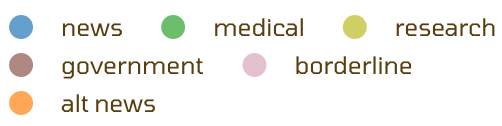

We used YouTube DL to capture the status of queried videos every day between the end of March and June 2020. In doing so, we were able to find an approximate date from which videos were no longer available, and why. This allowed us to: (1) trace the availability of videos over time (Figure 4); and (2) examine the reasons given by YouTube to remove videos in context with the platform’s anti-misinformation policies (Figures 2, 3).
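Given one status check per video per day, the approximate removal date is simply the first check at which the video was no longer available. The sketch below illustrates this under an assumed data layout (tuples of date, availability flag and removal message); the status checks themselves are not shown.

```python
from datetime import date


def first_unavailable(snapshots):
    """Return (date, reason) of the first check at which a video was gone.

    snapshots: iterable of (check_date, available, reason) tuples, one per
    daily check (hypothetical layout); reason is the message displayed for
    an unavailable video, or None while the video is online.
    Returns None if the video was available at every check.
    """
    for check_date, available, reason in sorted(snapshots, key=lambda s: s[0]):
        if not available:
            return check_date, reason
    return None
```

Because checks are daily, the returned date is an upper bound: the video was removed at some point between the previous check and this one.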

Lastly, we also compared the transcripts of unavailable and available videos. Our intention was to determine what false claims and other information would make videos vulnerable to deletion. While we could have performed text diff analysis between available and unavailable videos, we chose to filter texts by the types of undesired information YouTube mentions in their COVID misinformation policies. These are:

- treatment misinformation;

- prevention misinformation;

- diagnostic misinformation;

- and transmission misinformation.

Here, we extracted a list of words or a “dictionary” from video descriptions that correspond to one or a combination of these four types of misinformation (Table 2). With this dictionary, we were able to automatically label video transcripts accordingly.

| treatment misinformation | prevention misinformation | diagnostic misinformation | transmission misinformation | other |
| --- | --- | --- | --- | --- |
| hoax | religion; religious | symptoms | chinese food | bioweapon |
| hysteri; hysteria | pray; prayer | epidemic; epidemica; epidemiology | 5G; 5gee | military world games |
| sabotage | god | flu | radiation | bill gates |
| vaccination; vaccine; vaccines | jezus | test; testing | poison; poisoning | gates foundation |
| hydroxychloroquine | vitamins | masks | informed consent | event 201 |
| toxic; toxines | immune system | sickness | | agenda 21 |
| faith | immune; immunization | | | fema |
| pray; prayer | boost | | | qanon |
| choice | weather | | | qanon |
| #FilmYourHospital | | | | cabal |
| medicine | | | | trust the plan |
| | | | | storm |
| | | | | great awakening |
| | | | | nwo |
| | | | | plandemic |
| | | | | wuhan virology lab; wuhan lab; chinese lab |
| | | | | cover-up |
| | | | | deep state; deepstate |
| | | | | big pharma; bigpharma |
| | | | | madonna |

Table 2. Dictionary of terms categorized according to the types of COVID-19 misinformation as provided by YouTube.
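The dictionary-based labelling described above can be sketched as a simple term lookup. The code below is an illustrative assumption about how such labelling might work, not the project's actual script; it uses only a small excerpt of the terms in Table 2.

```python
import re

# Small excerpt of Table 2, for illustration only.
DICTIONARY = {
    "treatment": ["hydroxychloroquine", "vaccine", "vaccines", "vaccination"],
    "prevention": ["vitamins", "immune system"],
    "diagnostic": ["symptoms", "testing"],
    "transmission": ["5g", "radiation"],
}


def label_transcript(text, dictionary=DICTIONARY):
    """Return the sorted misinformation types whose terms occur in text."""
    lowered = text.lower()
    labels = set()
    for label, terms in dictionary.items():
        for term in terms:
            # Whole-term match, so that "5g" does not match inside "35g".
            if re.search(r"(?<!\w)" + re.escape(term) + r"(?!\w)", lowered):
                labels.add(label)
                break
    return sorted(labels)
```

A transcript can receive several labels at once, which matches the idea that a video may combine, for example, prevention and transmission claims. Note that a term match only signals that a topic is mentioned, not that the claim made about it is false.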

5.4. Mapping the “replatformisation” of deplatformed contents: the case of Plandemic

To determine where a video was re-uploaded or “replatformed”, we first needed to specify what we intend by “replatforming”. We determined that a video gets replatformed in both a technical and figurative sense. On the one hand, a video gets re-uploaded when the nearly exact audiovisual content is accessible in another website under the same or a different title. On the other, a video is “replatformed” in the sense that it (or the mention of it) (re)gains popularity or engagement after it is no longer accessible in its original upload site, particularly someplace that afforded it considerable engagement.

This “Streisand effect”, whereby banned contents gain popularity by virtue of being banned, meant that we also examined amounts of engagement with deleted contents even on the platforms that have banned them. As such, we measure engagement with both formal metrics (views, (dis)likes) and “chatter”, i.e., amounts of YouTube comments and Facebook, Reddit, TikTok, 4chan/pol and Instagram posts that mention Plandemic by name or by alternate URLs. We found alternate URLs by querying YouTube titles of the Plandemic documentary on DuckDuckGo and Bing: “PLANDEMIC DOCUMENTARY THE HIDDEN AGENDA BEHIND COVID 19”; “Plandemic Documentary The Hidden Agenda Behind Covid 19”; “The Hidden Agenda Of Covid-19 (Plandemic Documentary)”; “Plandemic”. We also checked the Bitchute and YouTube datasets for videos containing the words “Plandemic” and “Hidden agenda” in the title.

We manually captured engagement numbers of replatformed Plandemic videos from (a) its alternate URLs (collected as of July 1st, 2020) or (b) from archive.org or archive.is. To visualise the “replatformisation” of Plandemic videos and their engagement rates over time, we distributed the number of views across the number of days the video was online before July 1st. Because views are unlikely to be distributed equally, our visualisation does not fully represent the engagement rates of replatformed Plandemic videos.
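The even distribution of views over the days a video was online can be sketched as below. The function name and arguments are hypothetical; the default end date reflects the July 1st, 2020 collection date mentioned above, and a removal date could be passed instead for videos taken down earlier.

```python
from datetime import date, timedelta


def spread_views(upload_date, total_views, end_date=date(2020, 7, 1)):
    """Spread a video's total views evenly over the days it was online.

    Returns {day: views_per_day} for each day from upload_date up to, but
    not including, end_date. Even spreading is a simplification: actual
    views are unlikely to be distributed equally over time.
    """
    days_online = (end_date - upload_date).days
    if days_online <= 0:
        return {}
    per_day = total_views / days_online
    return {upload_date + timedelta(days=i): per_day for i in range(days_online)}
```

For example, a copy uploaded on June 21st with 1,000 views recorded on July 1st would be assigned 100 views for each of its 10 days online.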

Our case study on Plandemic met 3 significant limitations: (1) sampling; (2) the limitations placed by deplatforming itself; and (3) a shortage of data on view counts from alternative platforms. First, because of presumed title changes and translations, not all Plandemic videos were found on alternative platforms. Second, deplatforming Plandemic also makes it impossible to find some of its original video metadata. This means we had to rely on third parties for information on early copies (e.g., dates and views), such as archive.org. Here, too, we only found a selection of replatformed versions of Plandemic. Lastly, in order to map the “replatforming” of Plandemic, we mostly relied on platforms that afford view counts, like Bitchute and YouTube. As such, this approach dismisses uploads on file sharing, torrent and original Plandemic websites (e.g., plandemicvideo.com).

5.5. Comparing content moderation of COVID misinformation with the content moderation of hate speech on YouTube

To compare the effects of YouTube’s anti-misinformation and anti-hate speech moderation, we again sought to grasp the function of YouTube’s anti-hate speech policies. Here, too, we used the Wayback Machine’s Changes tool to trace a short history of YouTube’s anti-hate speech policies (see Section 2.1.). We extracted the undesired contents listed in policies over time, as well as the techniques YouTube uses to restrain hate speech.

We also questioned how YouTube adapted to ongoing debates about social and other identities. Besides capturing information YouTube deems problematic, we extracted specific groups the platform mentions as vulnerable to hate speech. This helped us make sense of changes in the affordances of content moderation techniques, from, for example, user-led initiatives (flagging, reporting) to more platform-driven, “top-down” measures (banning, suspension). We recreated a timeline and screencast with this information.

To examine the effects of YouTube’s anti-hate speech moderation, we used a dataset of comments and transcripts of about 1500 left and right-wing YouTube channels from 2008 up to late 2018, still largely untouched by recent deplatformings. This dataset was curated by Dimitri Tokmetzis, political reporter at the Dutch newspaper De Correspondent (Tokmetzis, Bahara and Kranenberg, 2019). Using YouTube DL, we verified the channels that were no longer available after the end of 2018 and extracted their current (offline) status (e.g., “This channel was suspended for hate speech violations.”). Doing so helped us understand the motives for removing channels at a particular point in time, as well as measure the proportion of channels suspended for hate speech.

We also sought to detect the kinds of hate speech these channels were suspended for. We found that YouTube’s anti-hate speech policies changed considerably between 2018 and 2019, at a time when online debates about what constitutes hate speech and on what grounds minorities are susceptible to it were prominent. We can hypothesise that misogynistic, antisemitic and other extreme speech may have gone unnoticed before debates around MeToo (2018) and the prominence of “alt-right” political culture on YouTube (2019) made them publicly notorious. It was important, in this sense, to determine what kinds of speech became more penalised in the context of public debates.

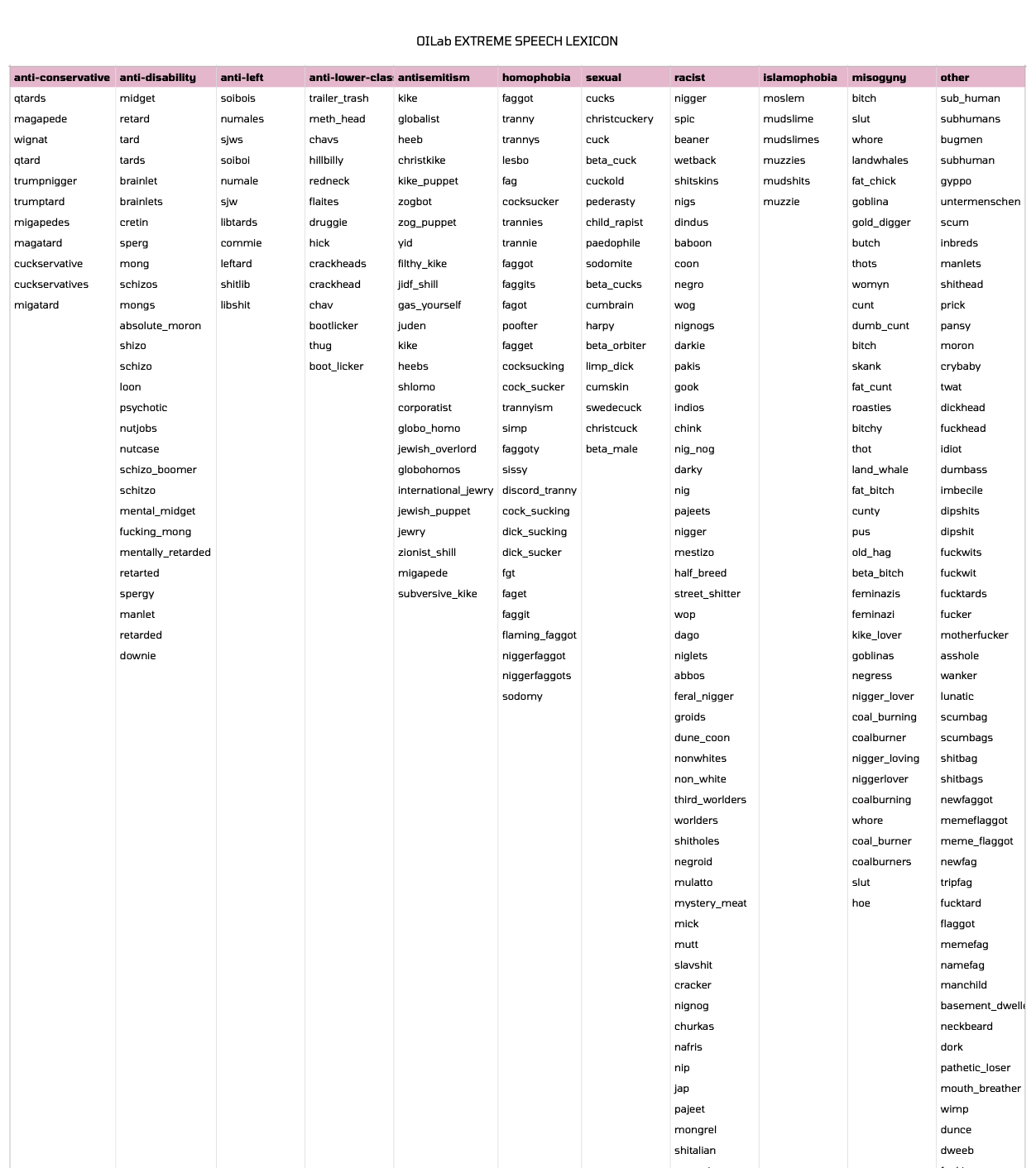

After determining that channels classified as “right-wing” were mostly penalised for hate speech, we filtered the transcripts of a total of 958 channels with OILab’s extreme speech lexicon (Table 3). We used this lexicon to measure the prominence of different kinds of hate or extreme speech over time. In doing so, we were able to measure the effects of content moderation policies on the spread of hate speech on YouTube between 2008 and 2020, and compare these results with our equivalent findings on COVID-19 misinformation.

Table 3: OILab Extreme Speech Lexicon. Source: Peeters, Hagen and Das, 2020
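Measuring the prominence of lexicon terms over time amounts to counting term occurrences per category and per year. The sketch below is a simplified assumption of such a count; the lexicon argument stands in for the OILab lexicon, and the category names and terms in the test are neutral placeholders.

```python
import re
from collections import Counter, defaultdict


def lexicon_hits_per_year(transcripts, lexicon):
    """Count lexicon term occurrences per category and year.

    transcripts: iterable of (year, text) pairs.
    lexicon: dict mapping a category name to a non-empty list of terms.
    Returns {year: Counter({category: hits})}.
    """
    patterns = {
        category: re.compile(
            r"\b(?:" + "|".join(re.escape(term) for term in terms) + r")\b",
            re.IGNORECASE,
        )
        for category, terms in lexicon.items()
    }
    hits = defaultdict(Counter)
    for year, text in transcripts:
        for category, pattern in patterns.items():
            hits[year][category] += len(pattern.findall(text))
    return hits
```

Raw counts grow with the volume of transcripts available per year, so comparing years would likely require normalising hits by the number of words or videos in each year.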

6. Findings

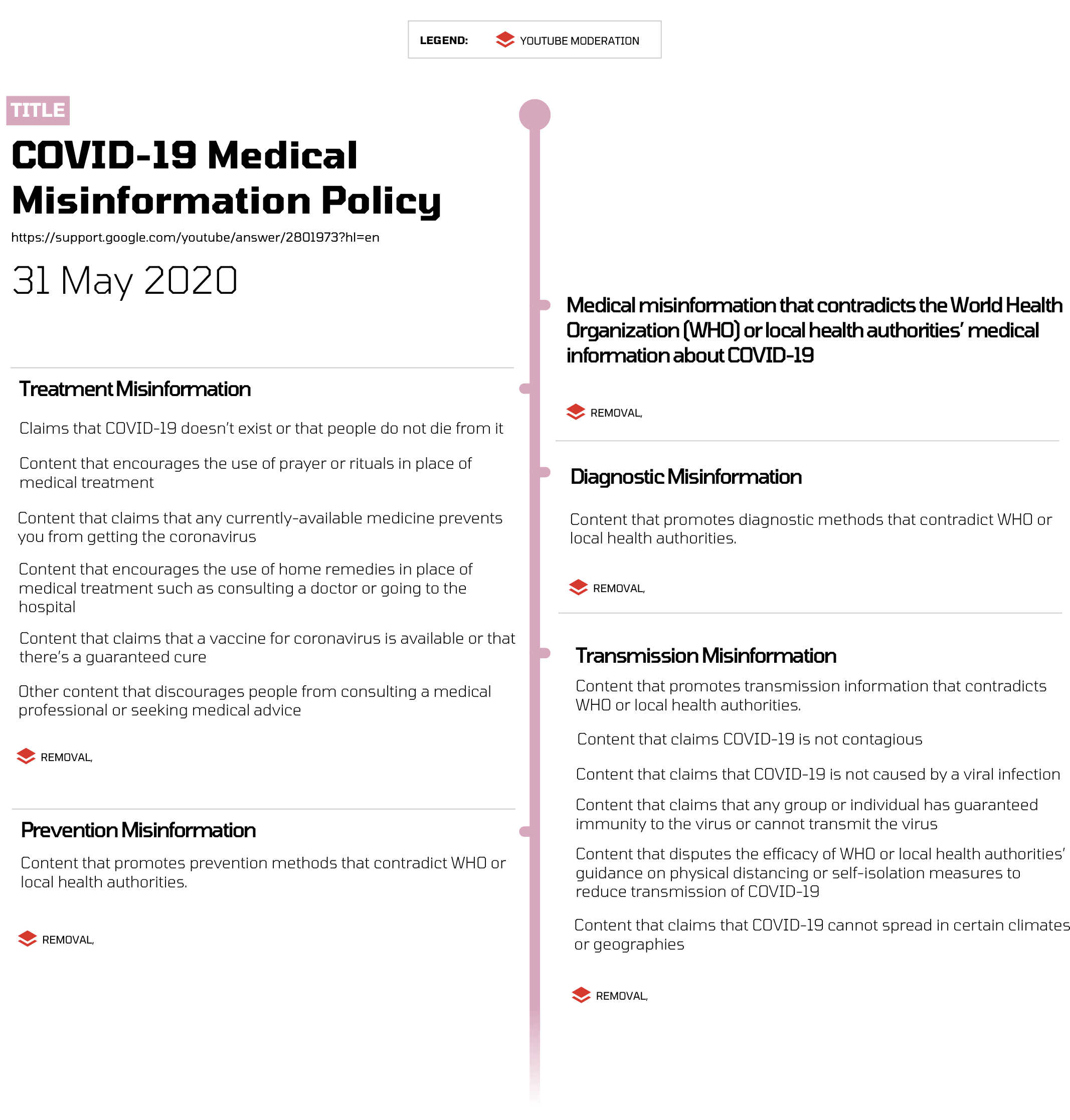

6.1. Compared to its anti-hate speech policies, YouTube has long had a more top-down moderation of misinformation

Like Facebook and Twitter, YouTube has put forward a policy specifically designed for COVID-19 misinformation (2020). This policy was introduced in early March 2020 and has seldom changed. From the beginning, it has deemed unacceptable any misinformation on transmission, diagnostics, treatment and prevention (see Figure 2), as well as any other misinformation that contradicts World Health Organisation guidelines. It frames such information as undesirable because it may bear a direct impact on users’ health, which may explain why this policy stands out as stricter than the platform’s general misinformation and hate speech policies. Also notable is the centralisation of misinformation guidelines around the World Health Organisation, which counters the popular idea of “platforms” as meta-aggregators of sparse, decentralised contents and usage guidelines.

Figure 2. Overview of the YouTube policy specifically related to COVID-19 misinformation.

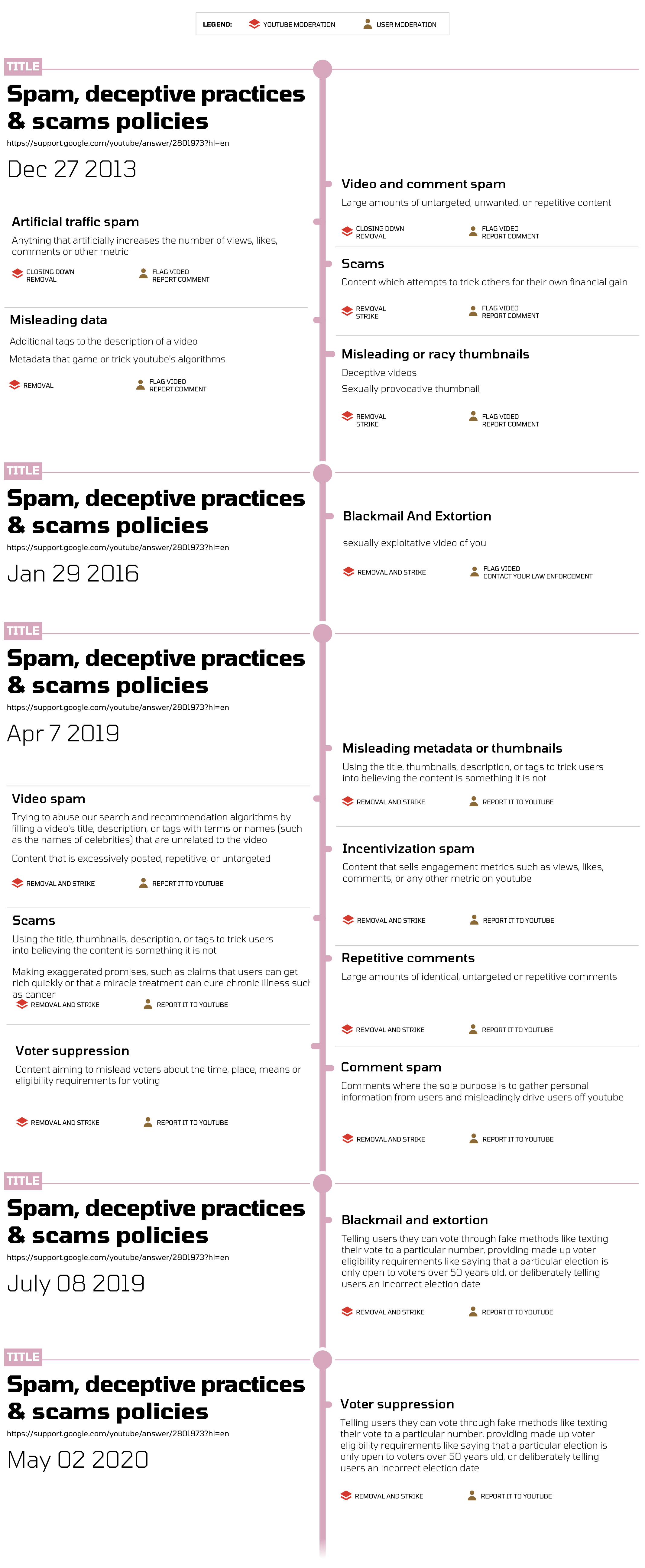

Contextualising this specific policy brings us back to YouTube’s general misinformation policy, entitled Spam, deceptive practices and scam policies. We see, here, that “misinformation” is a new term for YouTube’s content moderation policies (2020); indeed, it is only phrased as such in the COVID-19 policy, inaugurated in March 2020. Spam, deceptive practices and scam policies deal specifically with what the platform deems “misleading content”, such as false video metadata, as well as blackmail and extortion among users. In this sense, the platform’s early definition of falsehood ties mostly to artificial usage of the platform, be it for benign or harmful motives.

Figure 3. Timeline of the YouTube policy regarding misinformation in general (2013-2020).

Both policies, on COVID and on misinformation in general (spam, deceptive practices and scam), mention two types of content moderation techniques. The first is removal or suspension. YouTube has consistently sanctioned spam, deceptive practices and scam with “top-down” moderation techniques, such as content removal and “channel strikes” (a progressive suspension of channels). The second is demotion. In a separate blog post, YouTube mentions that it tackles COVID-19 misinformation with both removal and “raising up authoritative contents” in search and recommendation rankings. The latter technique indicates that YouTube may be exercising caution in framing misinformation in terms of objective truth. Conspiracy theories and hyper-partisan information may indeed defy this qualification, calling for more subtle techniques to evaluate their objective value in relation to news sources, research, governments and their industry standards.

6.2. In comparison to hate speech, content moderation techniques struggle to identify and remove “borderline information”

6.2.1. “Borderline information” such as conspiracies is not easily detectable, and thus removable

Applying YouTube’s content moderation policies to the COVID-19 misinformation video dataset (which records video uploads, videos that are still online and banned videos) showed that the introduction of different content moderation techniques has not caused any major changes in the removal of content on the platform (Figure 4). Even techniques that were already introduced in 2019, such as demotion and the removal of so-called “borderline content” (content that is not clearly defined as true, false or hateful), are not found to have caused significant changes in the ratio of removed content to uploaded videos related to COVID-19 misinformation.

Figure 4. Uploaded and unavailable video ratio in relation to content moderation policies over time.

6.2.2. Demoting complex conspiracies (QAnon, deep state) is less effective than demoting more specific COVID-19 conspiracies

Figure 5 details the different types of content returned through our set of queries (see Methodology) and shows how the search algorithm modifies their placement to “bury” or “raise” different types of content. From these graphs, we can see that conspiracy theories with richer, more historical backgrounds (such as ‘Qanon’ and ‘new world order’) have a higher variance in content than those conspiracy theories exclusively cropping up in relation to COVID, indicating the ways in which COVID conspiracies have piggybacked onto historical conspiracy narratives.

Figures 5. Scatter plots of query results over time. Y axis: search ranking; X axis: date; node: video; node size: video viewcount. Source: YouTube. Key below.

6.2.3. Conversely, specific information, such as falsehoods on COVID-19 transmission, prevention, diagnostics and treatment, is more easily defined and detected, and thus removed

The categorisation of available and unavailable content according to the curated list of dictionary terms related to COVID-19 misinformation (Table 2) shows that a significant number of the videos that are no longer online reflect misinformation on treatment (mainly vaccinations) and prevention (specifically related to immunity). These two categories, and their examples, were also specified subjects in the original content moderation policy provided by YouTube on May 31, 2020 (Figure 2).

Figure 6. Keywords used by banned and available videos on YouTube.

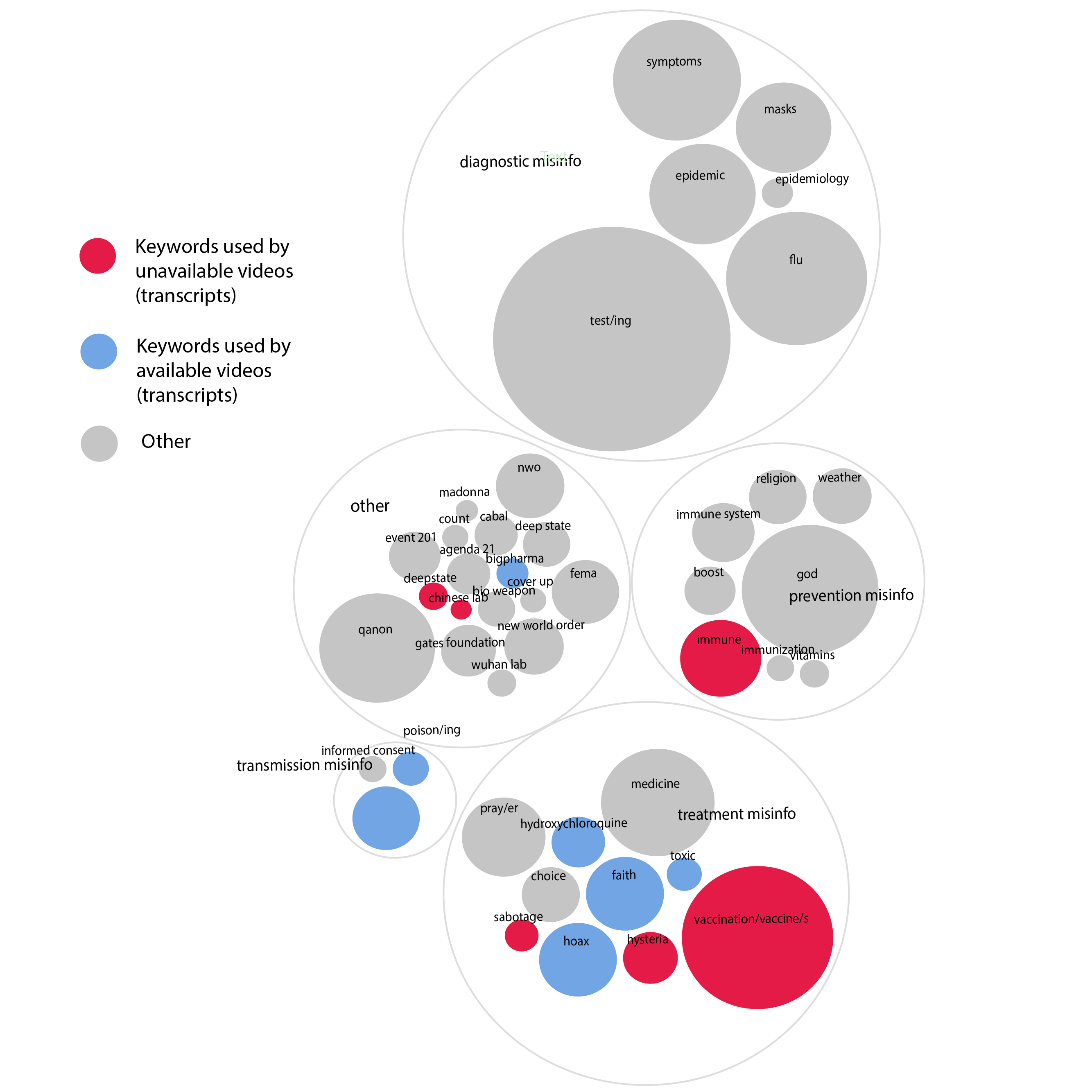
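The dictionary-based categorisation described above can be sketched as follows. The category names follow the text, but the term lists, the `title` and `available` fields, and the whole-word matching rule are assumptions made for illustration; the study's curated dictionary (Table 2) is not reproduced here.

```python
import re
from collections import Counter

# Hypothetical dictionary; the study's curated terms (Table 2) are not reproduced here.
CATEGORY_TERMS = {
    "treatment": ["vaccine", "vaccination", "cure"],
    "prevention": ["immunity", "mask"],
}


def categorise(text, category_terms=CATEGORY_TERMS):
    """Return the set of categories whose terms occur as whole words in the text."""
    lowered = text.lower()
    return {
        category
        for category, terms in category_terms.items()
        if any(re.search(r"\b" + re.escape(term) + r"\b", lowered) for term in terms)
    }


def count_categories(videos):
    """Count categories separately for available (True) and unavailable (False) videos."""
    counts = {True: Counter(), False: Counter()}
    for video in videos:
        counts[video["available"]].update(categorise(video["title"]))
    return counts


# Hypothetical records, for illustration only.
sample = [
    {"title": "Natural immunity beats the vaccine", "available": False},
    {"title": "Mask advice", "available": True},
    {"title": "Cooking show", "available": True},
]
counts = count_categories(sample)
```

Whole-word matching keeps short terms from matching inside unrelated words, at the cost of missing inflected forms (for example plurals) unless they are listed in the dictionary.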

6.2.4. YouTube-stated motives for banning videos reflect the platform’s difficulty in defining and detecting “borderline information” over hate speech

We also found that the stated motives for banning COVID-19 videos are not very informative. As shown in Figure 7, most banned videos have been removed for violating YouTube’s community guidelines, because the associated account has been terminated, or because the video in question is simply unavailable. Only a small number of videos have been removed for specific reasons, namely for inciting hatred, involving violence or harassment, or because of copyright claims.

Figure 7. Reasons behind video banning on YouTube, detected between June 16 and June 17, 2020.

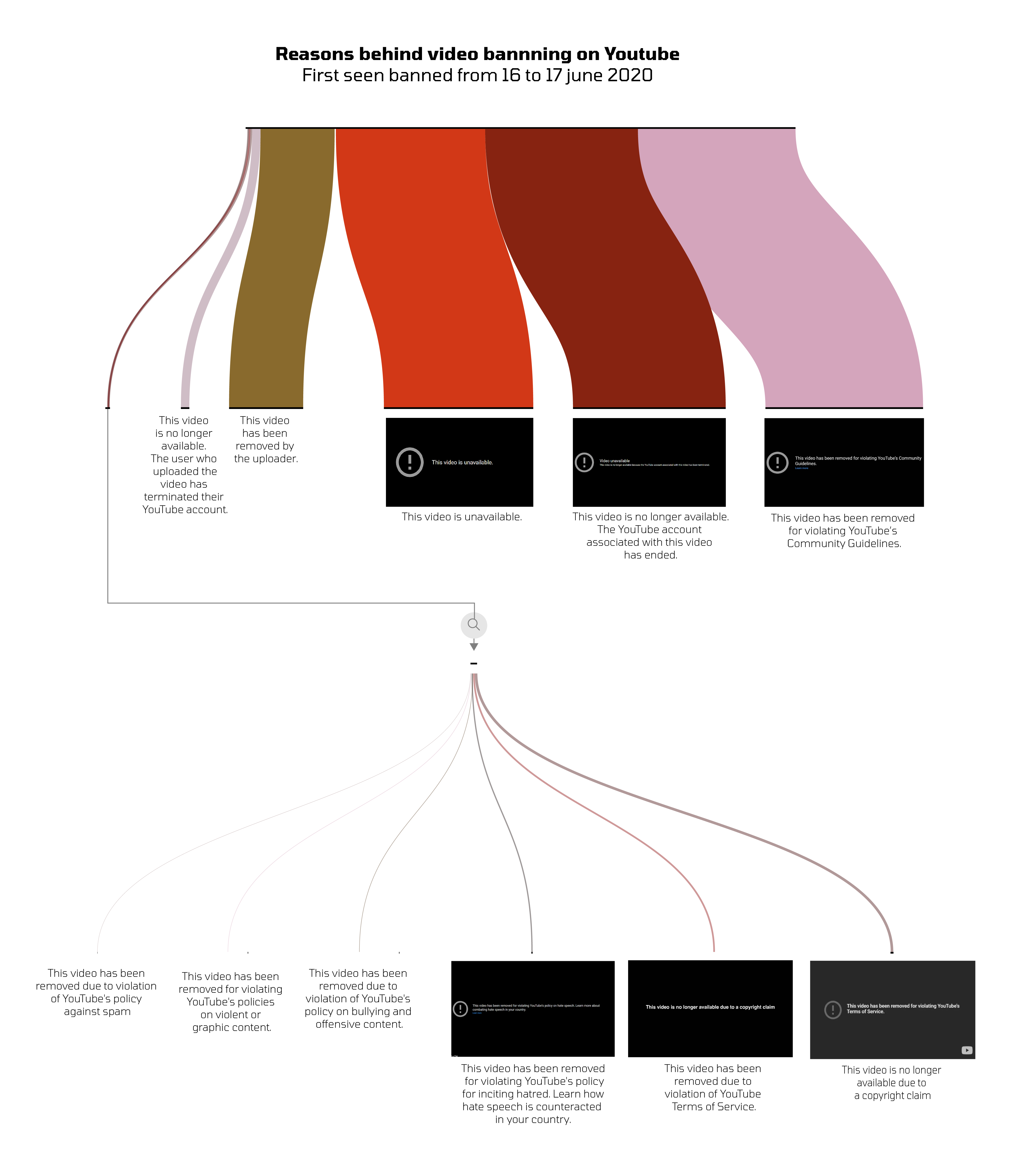

6.3. YouTube has had a significant role in the user engagement and circulation of the Plandemic video across the web

6.3.1. Plandemic received more views across YouTube, Vimeo and alternative sources when available in the former, suggesting that YouTube and Vimeo still hold a monopoly of attention

Figure 8 shows that the Plandemic video appeared on other platforms at roughly the same time as on YouTube. After being banned from Vimeo and YouTube around May 10th, the video continued to circulate on alternative sources, such as Bitchute, Banned, Ugetube and the Internet Archive. Though they are only estimates, view counts were significantly higher when the video was available on YouTube and Vimeo. Views diminish once YouTube and Vimeo take the video down, and those that remain fragment across alternative sources.

Figure 8. Beeswarm graph, where every dot represents a ‘Plandemic’ mirror that is online that day. The area of the dot is proportional to the estimated views of that video that day.

It is important to note that parts of Plandemic were still available on YouTube after deplatforming, suggesting that the removal of the video has not been absolute. Also, the number of videos (represented by the number of dots) is slightly misleading, as the videos on YouTube and Vimeo have far more views than their counterparts combined.

6.3.2. YouTube’s deplatforming of Plandemic caused a rise in engagement with the video across platforms, though it decreased engagement in the long run

As is visible in Figure 9, users had already mentioned the word ‘plandemic’ before the video was published on YouTube, notably on Instagram. On other platforms, user engagement takes off around the time the video is published on YouTube and Facebook.

YouTube removes the original video on May 5th, as do mirror websites on May 6th and 7th. Engagement increases precisely at that time, up until May 7th. This could indicate a short “Streisand effect”, or simply a usual cycle of virality.

After May 7th, engagement decreases on all platforms. While this is to be expected on actively moderated platforms (YouTube, Instagram, Facebook, Reddit), the same effect occurs on relatively unmoderated platforms (4chan, Gab). This suggests that YouTube’s deplatforming has had a strong effect on user engagement across the web.

Figure 9. Number of items containing the word ‘Plandemic’ on different platforms over time.
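The counts behind a chart like Figure 9 can be approximated with a minimal sketch. It assumes posts from each platform have already been collected into records with hypothetical `platform`, `date` and `text` fields; the collection step and these field names are assumptions, not the study's tooling.

```python
from collections import Counter


def mentions_per_day(items, keyword="plandemic"):
    """Count items per (platform, date) whose text contains the keyword, ignoring case."""
    counts = Counter()
    for item in items:
        if keyword in item["text"].lower():
            counts[(item["platform"], item["date"])] += 1
    return counts


# Hypothetical records, for illustration only.
sample = [
    {"platform": "reddit", "date": "2020-05-06", "text": "Has anyone seen Plandemic?"},
    {"platform": "reddit", "date": "2020-05-06", "text": "PLANDEMIC got taken down"},
    {"platform": "4chan", "date": "2020-05-07", "text": "mirror of plandemic here"},
    {"platform": "4chan", "date": "2020-05-07", "text": "unrelated thread"},
]
daily = mentions_per_day(sample)
```

Plain substring matching also counts hashtags and compound words containing the keyword, which suits a distinctive term like this one but would over-count for common words.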

6.4. Content moderation of COVID misinformation vs. the content moderation of hate speech

6.4.1. YouTube’s hate speech policies have been contingent on debates about hate speech and identity

As Figure 11 shows, hate speech policy has been highly susceptible to changes relative to platform user debates on hate speech and identity. From 2019, YouTube has frequently changed the list of identities it deems vulnerable to discrimination, progressing, for example, from “race and ethnicity, religion, disability, gender, age, gender identity or sexual orientation, and veteran status” to “age, caste, disability, ethnicity, gender identity, nationality, race, immigration status, religion, sex or gender, sexual orientation, victims of a major incident and their kin, and veteran status”. YouTube does not always make the distinction between “gender”, “sexual orientation” and “sex”; indeed, it states on March 12th, 2019, that it refers to “gender, sex and sexual orientation definitions mindful that society’s views on these definitions are evolving.”

As it begins to outline concrete examples of what counts as hate speech, YouTube also distinguishes “race”, “ethnicity”, “nationality” and “immigration status” variably. On August 26th, 2017, it clarifies that “it is generally okay to criticise a nation-state, but if the primary purpose of the content is to incite hatred against a group of people solely based on their ethnicity … it violates our policy.” From 2019, it specifies that hate speech includes using “racial, ethnic, religious and other slurs where the primary purpose is to promote hatred”, using “stereotypes” and “dehumanising individuals”. Coincident with the rise of discussions about race and intelligence (Lewis, 2018), YouTube begins to explicitly sanction “statements that one group is less than another based on certain attributes, such as calling them less intelligent, less capable, or damaged”.

The introduction of “victims of a major violent incident and their kin” was concurrent with a widely commented blog post dating from March 2019, in which YouTube revealed more decisive steps to combat antisemitism. At the time, it removed thousands of videos containing Nazi, white supremacist and World War II footage (OILab, 2019). This is the only instance where YouTube draws a clear line around ideological and conspiratorial contents, mentioning Holocaust revisionism, “videos that promote or glorify Nazi ideology” and conspiracies around the Sandy Hook Elementary shooting.

This blog post coincided with the removal of a majority of right-wing channels for using hate speech. Upon looking at the channel status of all 1500 channels of Tokmetsis’ YouTube dataset, we found that channels categorised as “right-wing” have more frequently been removed for hate speech, regardless of their view count, while channels defined as “left-wing” have been deleted for no specific reason (Figure 10).

Figure 10. Banned YouTube channels after the end of 2018. The channels are divided into two categories of political orientation: left-wing and right-wing.

6.4.2. YouTube’s hate speech policy has moved from user-led to platform-led content moderation

The most striking policy change is perhaps YouTube’s decision to delete “channels that brush up against our hate speech policies”. This sentence reflects the proactive, “top-down” techniques it has begun using from 2017, such as removal, channel strikes, and suspension from the YouTube Partner Program. Such techniques signal a clear departure from largely user-led measures, such as flagging or reporting.

Figure 11. YouTube’s anti-hate speech policy, 2013 - 2019.

Top-down measures had striking effects on the presence of extreme speech on the platform. Up until 2019, it was users who bore the responsibility (and had the affordances) to report and flag hate speech. These measures appear to have been largely ineffective. After the implementation of top-down removal, channel strikes and suspensions from the YouTube Partner Program (Figure 11) in March of 2019, the number of channels using extreme speech dropped significantly. This decrease is partly explained by the removal of 348 channels in March of 2020 (Alexander, 2020). Compared to the moderation of “borderline contents” such as COVID conspiracies, these results seem to suggest that hate speech is more easily detected, and thus moderated.

Figure 12. Frequency of extreme speech terms in 948 right-wing YouTube channels.

7. Discussion

In the past half-decade, Facebook, Twitter and YouTube have taken on a more proactive role in moderating their user base. This strategy has in most cases constituted a reaction to user harassment campaigns (Jeong, 2019), fake news-mediated dissemination of conspiracist narratives (Venturini et al., 2018), and inter-ethnic violence up to and including genocide (Mozur, 2018). Though some have spotted positive results (Newton, 2019), this approach invariably places platforms in fundamental debates as to what constitutes true, false, good and bad information, be they “mainstream” and “alternative” conceptions of current events or criteria for what constitutes an offence to racial, gender, and other identities.

7.1. Misinformation: from deceptive practices to borderline contents

Through the combined historical analysis of content moderation techniques, the moderation techniques related to COVID-19 misinformation and their effects, this study found that since 2013, YouTube has applied top-down techniques to fight false contents. The term “misinformation” is however quite new, and to a certain extent more controversial, in its repertoire of content moderation policies. The closest translation of this concept brings us back to Spam, deceptive practices and scam policies, which to this day aim to suppress false and actively harmful usages or appropriations of the platform, such as: placing false video metadata; gaming the recommender system; posting the same contents repeatedly; or involving users in scams, blackmail and extortion. Moderating these practices implies enforcing protective measures for users and YouTube itself. Harmful practices were from the outset clearly defined and suppressed by YouTube, rather than being (mostly) up to users to flag or report.

The term misinformation appears in conjunction with the concept of borderline contents in March of 2019. In a separate blog post, YouTube mentions that it has placed measures to combat two types of information: conspiracies or “borderline contents”, and hate speech in connection to Nazi and white supremacist ideologies. Though “borderline contents” is not clearly defined, YouTube uses conspiracies as an example, such as claiming that Sandy Hook did not take place. The problematisation of conspiracies as “borderline content” appears to be particularly difficult in this sense, as, unlike hate speech, they cannot be summarised into specific “slurs” and other detectable vernaculars.

One of its remedies against this type of content is to “demote” or bury it in search and recommendation results in favour of “authoritative” or “trusted sources”, such as reputed news sources and other outlets upholding industry standards of factual objectivity. But as we tested the effectiveness of demotion on COVID conspiracies, we noted that queries for more complex narratives around the deep state, QAnon and COVID being a hoax returned both “authoritative contents” and conspiratorial channels in upper search rankings. This difficulty could be explained by the fact that conspiracies account for more general worldviews, and cannot, unlike hate speech, be detected with a preset dictionary and list of doubtful sources. As evidenced in the project COVID-19 Conspiracy Tribes Across Instagram, TikTok, Telegram and YouTube, conspiracy tribes consume both authoritative and fringe sources of information, and can be in dialogue with user clusters and contents that are not necessarily part of fringe political subcultures. Such findings suggest that the detection and moderation of misinformation is more complex than that of hate speech, and raise questions regarding the contextualisation of specific misinformation within broader societal debates.

7.2. Hate speech detection: between moral imperatives and contingent societal debates

YouTube’s move from user-led anti-hate speech moderation to top-down, platform-led decisions signals an important shift in the way that YouTube understands and moderates hate speech. The implementation of top-down measures may explain the dramatic drop of extreme speech “utterings” from March 2019 onwards. These findings also suggest that, because it can be captured in dictionaries of terms, extreme speech may be more easily detected and suppressed. (Still, the “purge” of Nazi and white supremacist contents in March of 2019 was not free of collateral damage, as even World War II footage from municipal archives was accidentally deleted (Waterson, 2019).)

The introduction of new measures to fight borderline contents and hate speech in March 2019 marks a unique moment, as this is the first time that YouTube problematises a particular political culture. In the case of hate speech, for example, it justifies suppressing Nazi and white supremacist contents because they are “by definition discriminatory” of different identities (YouTube, 2019). This justification is still problematic to a user base who oppose top-down decisions that are, in their eyes, sympathetic to “identity politics”, arguing that any distinction of identities, even for progressive causes, substantiates discriminatory (i.e., differential) views of human identity (Miles, 2018). One could argue that, in the context of these debates, this is an instance in which YouTube uses conceptual (rather than technical or legal) resources to adjudicate on its user base and the contents they post, thereby making itself vulnerable to political consumer choices.

7.3. Replatforming contents and audiences

One may argue that the apparent “politicisation” of YouTube’s content moderation policies may make the platform vulnerable to losing its reputation as a platform. In choosing to no longer permit disputed contents such as conspiracies (or even, in some eyes, extreme political ideas) it may cease to be chosen by users for its “completeness”, that is, the breadth and amount of contents that earlier “neutral” content moderation policies afforded. This is evidenced by the presence (and popularity) of message boards such as 4chan/pol, whose liberal content moderation policies complement more policed mainstream platforms.

Still, as shown in our analysis of the replatforming of the Plandemic documentary, YouTube holds a crucial role in both access to and engagement with banned contents. On alternative platforms, Plandemic videos gain a much smaller audience than they did on mainstream platforms. Deplatforming is in this sense ineffective in banning the video, but far more effective in depriving it of an audience.

8. Concluding summary

This research set out to map the deplatforming, demotion, suspension and other measures specified by YouTube’s anti-COVID misinformation policies, and has assessed the effectiveness of these techniques by tracing deplatformed and demoted contents across YouTube and alternative online spheres over time. Furthermore, it has compared these results with hate speech moderation on YouTube, and with the impact of these moderation techniques on the circulation of such content over time.

The rapid spread of highly engaging COVID-19 misinformation and conspiracy theories caused significant challenges for content moderation on platforms, which are increasingly removing content in a more proactive fashion. Focusing specifically on YouTube, we sought to trace content moderation policies put forward by the platform over time, as well as understand the effects of content moderation techniques like demotion and deplatforming on user engagement with COVID-19 misinformation on YouTube and alternative platforms.

Our historical analysis showed that YouTube content moderation techniques have changed in relation to present-day debates about identity and misinformation, and have, in the case of hate speech moderation, shifted from a user-based to top-down removal of problematic contents. However, it is still more difficult for top-down measures to detect (and define!) “borderline contents”, be it for demotion or deplatforming techniques.

As did Rogers before us (2020), we found that deplatformed contents have appeared on numerous alternative platforms. We see, in this sense, an increasing diversification of alternative sources alongside a decrease in engagement with deplatformed contents. YouTube thus still holds a monopoly of attention over the web, though it does face an increasingly diverse (and still fractured) ensemble of competitors.

9. References

- Mozur, P. (2018) ‘A Genocide Incited on Facebook, With Posts From Myanmar’s Military’, The New York Times.

- World Health Organization (2020) Novel Coronavirus(2019-nCoV). Situation Report 13. World Health Organization.

- YouTube (2019) ‘Our ongoing work to tackle hate’, Official YouTube Blog. Available at: https://youtube.googleblog.com/2019/06/our-ongoing-work-to-tackle-hate.html (Accessed: 17 December 2019).

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.
