WinterSchool2018CollaborativeArchivingDigitalArt (04 Mar 2018, MeganPhipps)

Collaborative Archiving Digital Art

Team Members

- Annet Dekker (University of Amsterdam / aaaan.net)

- Claudia Roeck (University of Amsterdam)

- Dusan Barok (University of Amsterdam / monoskop.org)

- Julie Boschat Thorez (researcher / artist)

- David Gauthier

- Judith Hartstein

- Megan Phipps

- Larissa Tijsterman

- Jim Wraith

1. Introduction

The authors present their ongoing research on distributed and decentralized platforms that supplement standard collections management databases for art documentation, in particular for networked and processual artworks. The documentation and process of these types of artworks do not fit well in standard database applications because those applications are inflexible. Although a standard application can be adjusted to specific needs, most are developed by commercial companies, so this kind of flexibility comes at a price. To get away from these proprietary systems and, more importantly, to find a solution that can easily be shared and (re)used by others, we focused on open source alternatives. As an interdisciplinary team of a conservator, students, researchers, artists and programmers, we spent a week exploring and comparing the functionalities of two systems with built-in version control: MediaWiki and GitLab. As source material, we used research and documentation material from the artwork Chinese Gold by UBERMORGEN. In this report we reflect on the technical details of the two systems and on their pros and cons for documenting the material, while looking at the potential of collaborative workflows.

Networked / Processual art

New media art, digital art, software art, networked art, Internet art, net.art, post-Internet, new aesthetics… Over the past decades, many terms have been used to signify contemporary art that works with networked media (Dekker 2018). Rather than giving a definition for the artworks that we are interested in, we will describe the main characteristics that are important for our current research: being networked and processual. These features are not a priori technical; they connect with the concepts and practices of the artwork and are thus part of the artwork's specificity. This means that these artworks are not objects or even a collection of components that are defined as a final product/artwork. Rather, they consist of various building blocks that can be combined, composed, and compiled in different ways, at different times and locations (online and offline) and by different people. In other words, the artworks are not necessarily the consequence of a straightforward procedure that leads to specific results. Since the process of creation and (re)creation is heterogeneous and involves a certain level of improvisation that continually re-negotiates its structure, the documentation archive should ideally reflect this approach, while also enabling a revisiting of past iterations and information. Most content management systems are built around a relational database that can easily link different types of information. To achieve our goals, we instead focused on version control systems, which would allow us to revisit the history of changes. Moreover, as we were interested in the potential of collaborative workflows, we decided to focus on version control systems that also make it possible to work at the same time from different locations.

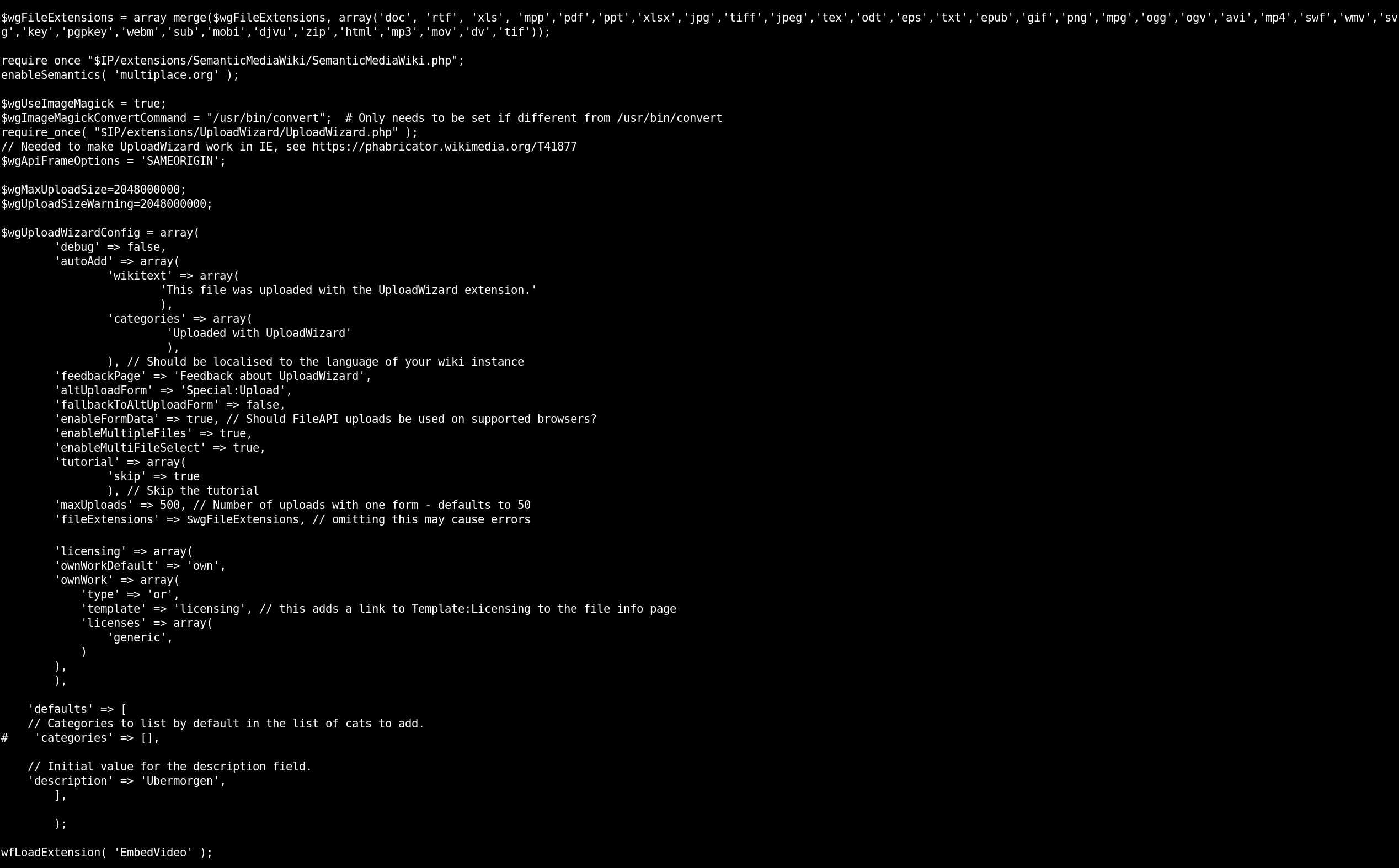

Version control system

Finding a coherent and structured way to organise and control revisions has always been at the core of archival practices. In the era of computing, these fundamentals became even more urgent and complex, and led to the production of version control systems (VCS). Version control systems check the differences between versions of code or text. By recording a timestamp and the name of the author with each change, and by making ongoing versions of a project available, a VCS allows multiple people to work on elements of a project without overwriting someone else's text. Changes that are made can easily be compared, restored, or, in some cases, merged. Version control systems are also a necessary archival allowance for the arts due to the frequency of "memory-intensive files and variations on those files" within the evolution of artworks (de Vries, 89). Expanding on de Vries, this study focuses on how distributed version control systems further collaboration in the archiving of digital art. The aim is to develop the ability of distributed and decentralized platforms to supplement collections management databases in contemporary art documentation, specifically digital art documentation.

Local version system.

Wikipedia is perhaps the most well-known example of using version control, in its 'page history'. Using 'QuickDiff', which is based on character-by-character analysis, it allows users to check the differences between new and previous versions. The thinking about version control began in the late 1960s (Mansoux 2017: 343). It was used in particular to understand when something goes wrong in a program, as a way to trace the bug that caused the problem (Rochkind 1975). Shortcomings in the performance of earlier systems, especially the speed of applying patches and updating metadata, together with the requirement of a simpler design, support for non-linear development (or parallel branching), and the need for a fully distributed system (Chacon and Straub 2014), led to the development of Git in 2005. Git is a source code management system, or a file storage system, which makes it possible to write code in a decentralised and distributed way by encouraging branching, that is, working on multiple versions at the same time and across different people. In addition, and of particular interest to our research, it facilitates tracking and auditing of changes. In 2007 GitHub started hosting Git repositories (or repos). Interestingly, the use of Git on GitHub turned the environment into a site of 'social coding' (Fuller et al. 2017). Rather quickly the collaborative coding repositories became used for widely diverse needs: from software development to writing license agreements, sharing Gregorian chants, and announcing a wedding; in short, anything that needs a quick way of sharing and improving information. As mentioned by GitHub co-founder Tom Preston-Werner: 'The open, collaborative workflow we have created for software development is so appealing that it's gaining traction for non-software projects that require significant collaboration' (McMillan, 2013).
Whereas a wiki's page history is a simple form of version control that is useful for collaborative writing and documentation, Git provides a more comprehensive approach to collaborative working.
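To make the idea of "checking the differences between versions" concrete, the following is a minimal sketch using the `difflib` module from Python's standard library. It is not the diff engine used by MediaWiki or Git; the function name `describe_change` and the sample texts are assumptions made for illustration only.

```python
import difflib


def describe_change(old_text: str, new_text: str, label: str = "page") -> str:
    """Return a unified diff between two revisions of a text."""
    diff = difflib.unified_diff(
        old_text.splitlines(keepends=True),
        new_text.splitlines(keepends=True),
        fromfile=f"{label} (previous revision)",
        tofile=f"{label} (current revision)",
    )
    return "".join(diff)


# Hypothetical sample revisions of a documentation page.
old = "Chinese Gold (2006)\nAn artwork by UBERMORGEN.\n"
new = "Chinese Gold (2006 - )\nAn artwork by UBERMORGEN.\n"
print(describe_change(old, new))
```

Lines prefixed with `-` were removed and lines prefixed with `+` were added. This line-based view works well for text, but says little about changes in binary files such as images.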


Case Study: 'Chinese Gold' by UBERMORGEN

To aid this research, we used a case study: the artwork Chinese Gold (2005-present) by the artist duo UBERMORGEN, and in particular the research and documentation about the process of digitally archiving the artwork. UBERMORGEN.COM is a well-known artist duo, founded in 1999 by Lizvlx and Hans Bernhard. They developed a series of landmark projects in digital art, including Vote-Auction (2000), a media performance involving a false site where Americans could supposedly put their vote up for auction, and Google Will Eat Itself (GWEI, 2005, in collaboration with Alessandro Ludovico and Paolo Cirio), a project that proposed using Google's own advertising revenue to buy up every single share in the company. With the project Chinese Gold they investigated the phenomenon of gold mining within World of Warcraft. The project revolves around a partly fictive research into the socio-economic position of virtual currencies. Chinese Gold spans over a decade and deals with a mix of research, documentation, appropriation, storytelling and remixing. It is constantly evolving, growing and in flux.

2. Initial Data Sets

For this research project, a conventional data set was not used. Instead, our initial data set was the artists' personal folder archive consisting of the files used for the Chinese Gold project. In order to scale down from the archive's initial size (10GB), we used only the oldest folder of the Chinese Gold archive, named "CHINESE_GOLD_2006". The folder "CHINESE_GOLD_2006" consists of 337 files (41 of them hidden files) in 66 directories. It contains multiple versions of the completed artwork as well as duplicate files that have been re-selected by the artists for a variety of purposes, which are explicit in the naming of the folders (e.g. "2014_CONTEMPORARY_ISTANBUL_HIGH_RES", "IMAGES_FOR_CULTURAS_2008_CATALOGUE", "NiMK _exhibition"). The maximum depth of the file tree is 6 (counting from zero starting at the root folder), for example in the case of "CHINESE_GOLD_2006/IMAGES/IMAGES_WOW_BELGRAD_SERIES/For_Belgien_Collector/source_files/Contrast_pushed_not_good/P1051336_c.jpg". Most of the files are pictures (.jpg, .png, .tif).

count  type of extension
------------------------------
117    jpg
82     png
67     none (folder)
57     tif
41     DS_Store (hidden file)
12     zip
6      html
5      doc
4      mov
3      gif
2      rtf
1      dv
1      iMovieProj
1      iMovieProject
1      mp4
1      odt
1      pdf
1      xls
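A count like the one above can be reproduced with a short script. The following is a minimal sketch and not necessarily how the table was produced: the function name `count_extensions` is hypothetical, the root folder is counted as a folder (an assumption), and extensions are kept in their original spelling.

```python
import os
from collections import Counter


def count_extensions(root: str) -> Counter:
    """Count folders and file extensions below `root` (root folder included)."""
    counts = Counter()
    for _dirpath, _dirnames, filenames in os.walk(root):
        counts["none (folder)"] += 1  # assumption: every visited folder counts once
        for name in filenames:
            _stem, ext = os.path.splitext(name)
            if name.startswith(".") and not ext:
                # Dotfiles such as ".DS_Store" have no extension of their own.
                counts[name[1:] + " (hidden file)"] += 1
            else:
                counts[ext[1:] if ext else "none"] += 1
    return counts


# Prints nothing if the folder is not present in the working directory.
for extension, number in count_extensions("CHINESE_GOLD_2006").most_common():
    print(f"{number:4d} {extension}")
```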

Aside from the work files (video and images) and the many copies of these files in various sizes and resolutions, the folders also contain the research made by the artists for the work. IMAGES contains one folder called SCREENSHOTS which, unlike the 8 other ones, contains image research. The first folder contains photographs of a relevant currency trading website. The next folders show the relationship between the work's name and the visual correspondences that can be established through search engine queries: Chinese gold vs. encrypted gold bars, golden coins, old golden objects, and wedding rings. Although these items have not been used by the artists in the work's production, they are exemplary of their work process and of how they attempted to envision Chinese culture's take on "golden digital currency".

[Insert picture here: DigitalMethods2018 /DMI_WS_2018_Presentation/bar_chart_extensions.png]

Caption: bar chart showing the distribution of types of file extensions by category (Source: own representation, created with RAWgraphs.io)

3. Research Questions

The main questions that we want to answer are:

b. How can VCS be effectively integrated into the practice of archiving? This is to be understood from the perspective of what is being archived, and for what purpose (preservation, art history, etc.).

Alongside the practical experimentation, and to gain a better understanding of the underlying structures that support these environments, we will focus on answering the following questions:

- What is the value of concepts such as provenance, appraisal and selection in GitLab and MediaWiki?

- What is the function of metadata in these systems?

- How stable and secure is the data in a version controlled archive?

- Would these systems enable the reorganisation of the archive's structure through time (e.g. if the status of a document changed from documentation to artwork)?

4. Methodology

Using the files in "CHINESE_GOLD_2006", we aimed to answer our research questions through a practice-based investigation of the VCS affordances in GitLab and MediaWiki. The first step in this collaborative effort was to upload the "CHINESE_GOLD_2006" folder to both the GitLab and the MediaWiki platform. This course of action gave us a concrete understanding of what we can or cannot do on each system, the information that we can collect on the archive's alterations in time, and how we can preserve traces of the initial archive structure in the two VCSs. The methodology is therefore divided into two parts: 1) GitLab and 2) MediaWiki.

Git(Lab)

Git is a 'source code management' (SCM) storage system that encourages developers to duplicate, track, integrate, and merge code repositories across multiple versions of the same project. Git also provides its users with the ability to trace and track the work in progress (Fuller, 87). A variety of hosting services exist for Git, such as GitHub, GitLab, BitBucket, and more. Prior to the start of this project, GitHub was the platform chosen for comparison with MediaWiki, with regard to the affordances each platform offers for the collaborative archiving of Chinese Gold (2006 - ) by UBERMORGEN. After re-evaluating the features unique to GitHub and comparing them to those of GitLab, we concluded that the latter platform was more suitable to the needs of this specific case study.

GitLab is a web-based repository manager and an open source alternative to GitHub, which is one of the largest dynamic repositories of software online. GitLab simplifies the process(es) of Git itself and provides additional functions to support collaborative development (e.g. a wiki). These additional functions include unlimited, free shared runners for public projects, built-in Continuous Integration/Continuous Delivery (CI/CD), promotion of innersourcing of internal repositories, the designing, tracking, and managing of project 'milestones', exporting one's project to other systems, and the creation of new branches from an issue. Moreover, GitHub's repository and/or branch permissions, as well as the way upstream maintainers edit in a branch, are not ideal for collaborative digital archiving projects: the need for repository administrators to approve each push to the repository and/or branch slows the evolution of collaborative archival projects.

- The need to co-ordinate across often increasingly large-scale projects, or the need to develop project requirements as the system develops (89).

- Version control as a necessary archival allowance for the arts due to the frequency of "memory-intensive files and variations on those files" within the evolution of artworks (89).

- Distributed vs. centralized.
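One reason a Git-based archive lends itself to tracking and auditing is that Git identifies every stored file version by a hash of its content. The sketch below reproduces the identifier Git assigns to a file (a "blob") in repositories that use the default SHA-1 object format; the function name `git_blob_id` is our own, and the sketch does not cover trees, commits, or repositories configured for SHA-256.

```python
import hashlib


def git_blob_id(content: bytes) -> str:
    """Compute the object id Git (SHA-1 object format) gives to file content."""
    header = b"blob %d\0" % len(content)  # "blob <size in bytes>" plus a NUL byte
    return hashlib.sha1(header + content).hexdigest()


# Two copies of the same image in different folders get the same id,
# while any altered byte produces a different one.
print(git_blob_id(b"hello world\n"))
```

Because the identifier depends only on the content, every clone of the repository can verify independently that a file has not been altered, which bears on the question of how stable and secure the data is in a version controlled archive.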

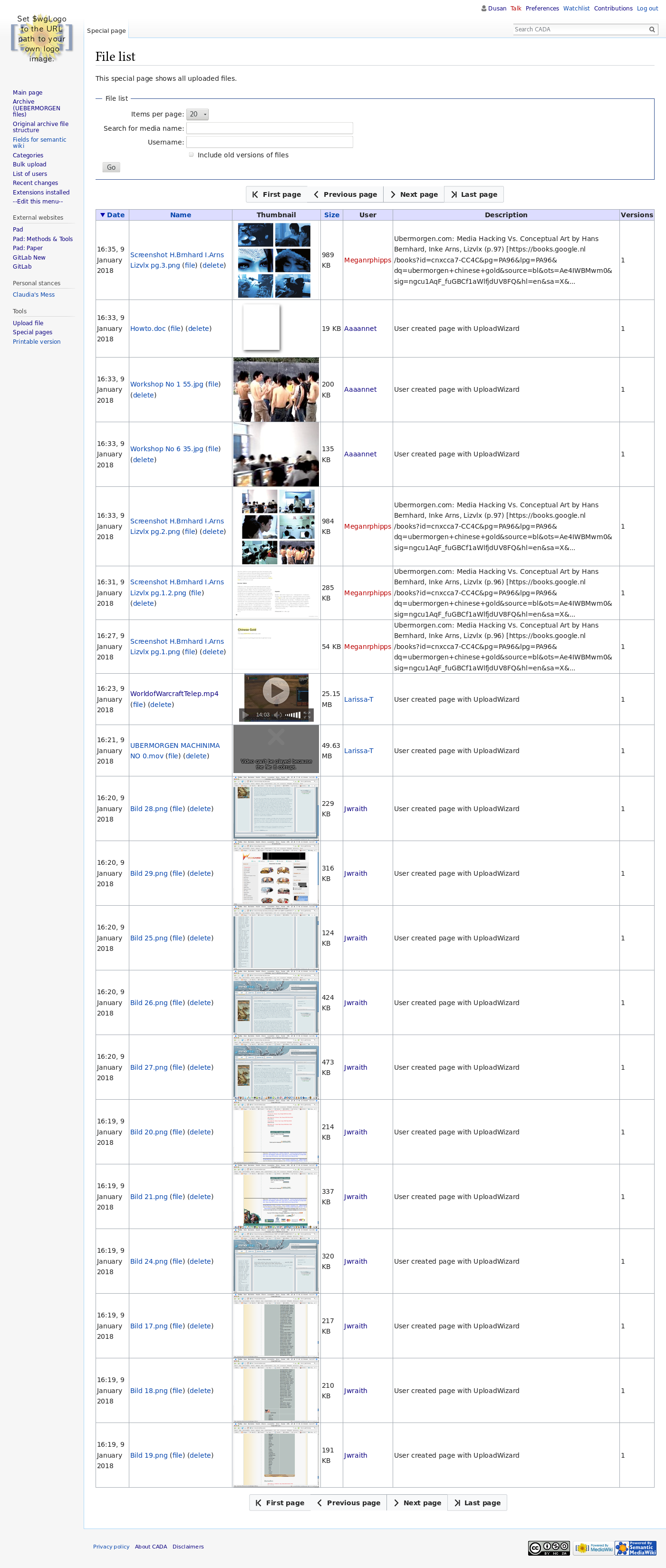

MediaWiki

MediaWiki is free and open-source software that runs a website on which users can create and collaboratively modify pages or entries via a web browser. The platform is developed continually by the community around the Wikimedia Foundation, the operator of Wikipedia. Its core functionality can be extended by hundreds of extensions for file, data and user management, metadata, layout, and so on. GitLab also includes a wiki, which uses Markdown, in contrast to MediaWiki, which uses its own syntax. MediaWiki is much more sophisticated than the GitLab wiki. In particular, MediaWiki allows pages to be categorized and semantically tagged.

For MediaWiki, two different pages were set up, each with its own view on how to use the platform for archival purposes. The first page, called the Belgrade Series, aimed to find a basis on which the files from the "IMAGES\IMAGES_WOW_BELGRAD_SERIES" folder could be structured. "IMAGES\IMAGES_WOW_BELGRAD_SERIES" contains six subfolders, in which the same set of photos appears in different formats and sizes, and for varying purposes. The Belgrade Series page therefore consists of an experimental categorization and an array of uploaded files in various formats. One example is Section 1.2 of the Belgrade Series page, entitled 'REX Exhibition'. The content in Section 1.2 originally came from the raw archive folder "tif_f_REX_exhibition__45x60_300dpi". This folder contained large TIFF files, which looked similar to the web version files. We presumed that these files were used for the REX Exhibition in Belgrade (2007 REX Gallery, Belgrade „Chinese Gold, Amazon Noir & GWEI Slideshow“). Because there was no logical order to these TIFF files, a gallery was made for them using MediaWiki. However, in the process of saving the page, the team noticed that MediaWiki was not able to display TIFF files.
Consequently, all of the files that were taken from the "tif_f_REX_exhibition__45x60_300dpi" folder now exist on the MediaWiki as mere hyperlinks to each individual file page.

For the NiMK Exhibition page, we chose another non-conventional approach: to gather as much information connected to the NiMK Exhibition as possible and display it on MediaWiki. The page therefore includes the artworks that were used, information about the exhibition, documentation about the installation, pictures and videos of the installation, visitors' comments, critical reception and other media publications that we could find. This made the page well suited to 'semantic linking'. The Semantic MediaWiki extension allows annotations to be made in a format visually similar to the 'category' tag, yet it is far more powerful: it can make direct queries to the database in which all the files and pages are stored, and the results can be exported as Resource Description Framework (RDF) and/or Comma-separated Values (CSV). Using the semantics function, we were able to link information on the page to properties that can be found on other pages as well. For example, raw facts of the exhibition, such as the institution where the works have been exhibited, are formatted as [[Held_in_venue::NiMK]], and the city where the works have been shown as [[Held_in_city::Amsterdam]]. Such annotations help when querying the wiki once it is scaled up, and make it easier to answer questions such as: Which locations/institutions have exhibited Chinese Gold (2006 - )? Finally, we linked all of the documents connected to the exhibition through semantics: [[Component_of::NiMK_exhibition|component of ''Chinese Gold'' at NiMK exhibition]] and [[Documentation_of::NiMK_exhibition|documentation of ''Chinese Gold'' at NiMK exhibition]].
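To illustrate how such annotations turn page text into queryable facts, here is a minimal sketch that extracts property/value pairs from wikitext and answers the "which venues?" question over a set of pages. It assumes the annotations are written in Semantic MediaWiki's double-bracket form `[[Property::Value|optional label]]`. The regular expression, the function names, and the sample pages are our own assumptions; Semantic MediaWiki itself stores and queries these facts in its database rather than by re-parsing text.

```python
import re
from collections import defaultdict

# Matches [[Property::Value]] and [[Property::Value|display text]].
ANNOTATION = re.compile(r"\[\[([^\[\]:|]+)::([^\[\]|]+)(?:\|[^\[\]]*)?\]\]")


def extract_properties(wikitext: str) -> dict:
    """Collect all semantic annotations of a page as {property: [values]}."""
    props = defaultdict(list)
    for name, value in ANNOTATION.findall(wikitext):
        props[name.strip()].append(value.strip())
    return dict(props)


def values_of(pages: dict, prop: str) -> set:
    """Gather the values of one property across several pages."""
    found = set()
    for text in pages.values():
        found.update(extract_properties(text).get(prop, []))
    return found


# Hypothetical page contents for illustration.
pages = {
    "NiMK_exhibition": "Shown at [[Held_in_venue::NiMK]] in [[Held_in_city::Amsterdam]].",
    "Installation_photo": "[[Documentation_of::NiMK_exhibition|documentation of ''Chinese Gold'' at NiMK exhibition]]",
}
print(values_of(pages, "Held_in_venue"))
```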

Non-default extensions employed: Upload Wizard, EmbedVideo, Semantic MediaWiki.

Figure 1. File archive on MediaWiki.

5. Findings

When evaluating the affordances of GitLab and MediaWiki, our assessment criteria consisted of:

1) Collaboration: How does the platform enable or aid a collaborative workflow? Are the system's affordances easy to grasp? How complex is the overall use of the platform? Are there user hierarchies? Is it user-friendly?

2) Storage Management: Is the version control system centralized or distributed? How easy and/or open-sourced is the uploading and retrieval of files? Is there an option for a Large File Storage (LFS) extension?

3) Presentation of Archive: What are the aesthetics of the system's platform? How intuitive are the visual affordances? Does the platform's format aid the storage of digital artworks?

4) Overview and/or Visualisation of Changes: What possibilities does the system provide for describing and tracking changes?

5) Metadata: How does the system handle metadata and file paths? How extensive is the metadata for a given file?

6) Strengths/Uniqueness: What can the tool do extremely well?

7) Weakness: What is the tool's biggest deficiency? What are its weaknesses?

What properties would we like to track (and keep) in an archive?

- provenance of a file (creation date) and other file creation metadata (for instance EXIF data)

- who uploaded the file to the archive and when and who changed the file

- file structure as uploaded

- Being able to differentiate between description and object of description. Being able to link description and object of description

- Being able to change a text file and image file and compare the changes to a previous version

- Being able to describe (give reasons for) changes
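The properties listed above can be thought of as one record per file. The following is a minimal sketch of such a record, under stated assumptions: the class `FileRecord`, its field names, and the helper `describe_file` are hypothetical, and the filesystem's modification time is used only as a stand-in, since a reliable creation date is not available on every system and EXIF data would need a separate library.

```python
import hashlib
import os
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class FileRecord:
    original_path: str  # path relative to the archive root, as uploaded
    size_bytes: int
    modified_utc: str   # modification time; not necessarily the creation date
    sha256: str         # fingerprint to detect later changes to the file
    uploaded_by: str
    note: str           # free-text reason for the upload or change


def describe_file(root: str, rel_path: str, uploaded_by: str, note: str = "") -> FileRecord:
    """Build a provenance record for one file below `root`."""
    full_path = os.path.join(root, rel_path)
    stat = os.stat(full_path)
    with open(full_path, "rb") as handle:
        digest = hashlib.sha256(handle.read()).hexdigest()
    return FileRecord(
        original_path=rel_path.replace(os.sep, "/"),
        size_bytes=stat.st_size,
        modified_utc=datetime.fromtimestamp(stat.st_mtime, tz=timezone.utc).isoformat(),
        sha256=digest,
        uploaded_by=uploaded_by,
        note=note,
    )
```

A record can be serialised with `asdict(record)` and stored next to the file, so that the original path survives even on a platform that flattens the folder structure.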

Findings: Git vs. MediaWiki

We found that certain features of a version control system should be prioritized when archiving a digital artwork like Chinese Gold (2006 - ). Firstly, the VCS must record provenance: the creation date and the creation metadata (e.g. EXIF data) of each file. It must allow users to track who uploaded individual files. When changes are made to a file, the system should offer an easily accessible way to see which user made the change and when (date and time) the change was made. The files should also remain accessible in the structure in which they were originally uploaded. The VCS must be able to differentiate between a description and the object of description, and to link the two together. It should be possible to change a text file or an image file and to compare the changed file with its previous versions. Lastly, when users make changes to a file, the VCS should provide the option to describe and/or give reasons for the changes made, a feature particularly crucial for long-term conservation and archival digital art projects (i.e. in institutions).

When exploring the technical affordances of each system, we discovered downsides specific to each platform and ran into certain logistical issues. With regard to GitLab, the tagging workflow was tedious and difficult, and the system also exhibited a degree of user hierarchy: future users may need an explanation of the repository from past and/or current top administrators before accessing a particular repository. This structural issue, along with the platform's format aesthetics, means that the sectioning, formulation, and/or interpretation of an artwork can be significantly influenced by one individual or a select few. These restrictions would be a disadvantage particularly for the conservator, compared to the curator. The downsides of MediaWiki include the inability to keep a master file, the fact that it is only accessible online, and the concreteness of the demarcation of files. The latter is particularly undesirable for the archiving of ongoing and open-ended digital artworks or projects, such as Chinese Gold (2006 - ) by UBERMORGEN.

MediaWiki as an archiving tool:

- Collaboration: An administrator assigns editing rights to the users. There are many different levels of editing rights, which determine what the user is allowed to do, for instance edit pages, delete pages, or edit the sidebar (https://www.mediawiki.org/wiki/Manual:User_rights). MediaWiki also has a discussion tab for each page (content centred). The creation of categories and semantic tags has to be organised, otherwise it becomes chaotic.

- Storage Management: All data is stored on one central server. In the context of preservation, this is less desirable than the distributed system used by Git.

- Files can be bulk uploaded, but only in a flat list structure (folder hierarchies are not kept). Bulk download is possible through the API.

- Is bulk download also possible with a web crawler?

- Presentation of archive: The user sees the most current version. There are no forks or splitting up of projects.

- MediaWiki does track changes, but comparability is somewhat opaque: textual changes are easily compared (similar to diff), whereas changes to binary files are less accessible.
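As a sketch of what "bulk download through the API" could look like, the code below lists all uploaded files of a wiki using the MediaWiki Action API (`action=query` with `list=allimages`). The helper names are our own, the location of `api.php` differs per installation and is an assumption here, and the sketch only collects file names and URLs; downloading the files themselves is left out.

```python
import json
import urllib.parse
import urllib.request


def allimages_url(api_url: str, limit: int = 50, cont: dict = None) -> str:
    """Build one request URL for the list of uploaded files."""
    params = {
        "action": "query",
        "list": "allimages",
        "aiprop": "url|timestamp|user",
        "ailimit": str(limit),
        "format": "json",
    }
    if cont:
        params.update(cont)  # continuation values returned by the previous request
    return api_url + "?" + urllib.parse.urlencode(params)


def parse_allimages(response_text: str):
    """Return ([(file name, file URL), ...], continuation dict or None)."""
    data = json.loads(response_text)
    images = data.get("query", {}).get("allimages", [])
    return [(img["name"], img["url"]) for img in images], data.get("continue")


def list_all_files(api_url: str):
    """Yield (name, url) for every uploaded file, following continuation."""
    cont = None
    while True:
        with urllib.request.urlopen(allimages_url(api_url, cont=cont)) as response:
            files, cont = parse_allimages(response.read().decode("utf-8"))
        yield from files
        if not cont:
            break
```

For example, `list_all_files("https://example.org/w/api.php")` would iterate over all files of a wiki whose API lives at that (placeholder) address. The resulting list is flat, so the original folder hierarchy still has to be recorded elsewhere.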

MediaWiki has a flat structure: pages are linked amongst each other, without hierarchy. We therefore need to find a way to keep the file paths of the stored objects, and also to maintain some of the functionality of the folder organization (or indeed improve on it), for example through the Semantic MediaWiki extension, which allows a range of properties to be applied to a given item.

There is a limited range of file formats that can be rendered in MediaWiki. This range can be extended with MediaWiki extensions. The file formats of Chinese Gold are quite ideal in the sense that they are compressed and common. MediaWiki allows files to be bulk uploaded. It automatically lists all the files, including a preview (the EmbedVideo extension is required for non-W3C native video formats such as MP4) and image metadata, but it does not keep the hierarchical folder structure. MediaWiki also allows context data to be added.
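One possible way to keep file paths in a flat structure is to derive both a unique flat title and a set of semantic annotations from each original path. The sketch below is only an illustration of that idea: the property names `Original_path` and `In_folder`, the `--` separator, and the function names are hypothetical and were not part of our wiki setup.

```python
from pathlib import PurePosixPath


def flat_title(original_path: str, separator: str = "--") -> str:
    """Encode a folder path in a single title, so equal file names stay distinct."""
    return separator.join(PurePosixPath(original_path).parts)


def path_annotations(original_path: str) -> list:
    """Describe the original location as Semantic MediaWiki style annotations."""
    parts = PurePosixPath(original_path).parts
    lines = [f"[[Original_path::{original_path}]]"]
    # One annotation per enclosing folder, so files can be queried by folder.
    lines += [f"[[In_folder::{folder}]]" for folder in parts[:-1]]
    return lines


path = "CHINESE_GOLD_2006/IMAGES/IMAGES_WOW_BELGRAD_SERIES/For_Belgien_Collector/source_files/Contrast_pushed_not_good/P1051336_c.jpg"
print(flat_title(path))
print("\n".join(path_annotations(path)))
```

With annotations like these, a query for all files with a given `In_folder` value would approximate opening that folder, while the `Original_path` value keeps the full path for later reconstruction.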

What we did with wiki:

For the wiki we set up two different pages, each with its own view on how to use a wiki for archival purposes:

- Belgrade Series (https://multiplace.org/cada/index.php/Belgrade_Series)

- For the Belgrade Series page we took the file structure of the IMAGES\IMAGES_WOW_BELGRAD_SERIES folder as a basis. The folder has six subfolders in which the same set of photos appears in different formats and sizes for different purposes. The Belgrade Series page has the following content:

- 1 Photo series

- 1.1 Used on the web -> After some digging, we found that these images came from Hans's own personal website. There was some text on it as well, so we copied the text and added it along with a screenshot of the webpage, followed by an ordered gallery of the pictures that were in the Webversion folder. Within the folder there was another subfolder called "not perfect" with 2 images in the same format as the Webversion pictures, so we included them under this subheader.

- 1.2 REX Exhibition -> There was a folder called tif_f_REX_exhibition__45x60_300dpi, which had larger TIFF files that looked similar to the Webversion files. These files were probably used for the REX Exhibition in Belgrade (2007 REX Gallery, Belgrade „Chinese Gold, Amazon Noir & GWEI Slideshow“). We have not looked into the details of this exhibition, so we cannot add more information on the how, what and why at the moment. There was no logical order to the TIFF files, so we created a gallery within the wiki with those files. However, while saving the page we noticed that the wiki cannot display TIFF files, so now they are all hyperlinks to each individual file page.

- 1.3 Originals -> There was one folder called originals containing pictures with no particular logical structure; these were the original pictures in the series. We added these to the gallery in the same order as the Webversion pictures, so that it is easier to compare them, especially as some pictures within the gallery look very similar.

- 1.4 For collector -> There is one folder named For_Belgien_Collector, which we assume contains files that were specially prepared for a Belgian collector; however, we know that the artists do not even remember who this was. We did not yet have time to put these files up.

- For the NiMK exhibition page we took a different approach: we wanted to gather as much information as possible that was connected to this particular exhibition. Because of this, the page has been perfect for the use of semantic linking.

- The Semantic MediaWiki extension allows annotations to be made within MediaWiki. The format looks similar to the category tag; however, it is much more powerful, as it can be used to make direct queries to the database where all files and pages are stored, and the results can also be exported in RDF and CSV.

- Using the semantics we can link information on the page to properties that can be found on other pages as well. For example, raw facts of the exhibition, such as the institution where the works have been exhibited, are formatted as [[Held_in_venue::NiMK]], and the city where the works have been shown as [[Held_in_city::Amsterdam]]. Such annotations help when querying the wiki once it is scaled up. Questions that could be answered relatively easily with the semantics are, for example: in which institutions has the Chinese Gold series, or even a particular work, been exhibited? Or, given a city: find all artworks or all exhibitions that have been in Amsterdam. Semantics can be really powerful for creating specific overviews. However, they need to be implemented carefully, consistently and thoughtfully, as the annotations you create are for potential future uses by unknown users. Annotating every word is not useful, as it would create too many field names. A possible solution is to define a set list of field names beforehand, on the basis of a controlled vocabulary, and to distribute that list among all users. Using Semantic MediaWiki requires careful deliberation about how you are going to organise your wiki; we would recommend designing an architecture of what you would like to be searchable before you start annotating.

- The structure of the page is also very important. As we strove for a consistent format, it made us stop and think about what we wanted to describe and show on this page. Again, the wiki forces you to stop and think before you act. We decided to gather everything that is connected to this particular exhibition and display it on this page. We included the artworks that were used, information about the exhibition, documentation about the installation, pictures and videos of the installation, visitors' comments, critical reception and other media publications that we could find.

- We linked all documents connected to the exhibition through semantics: [[Component_of::NiMK_exhibition|component of ''Chinese Gold'' at NiMK exhibition]] and [[Documentation_of::NiMK_exhibition|documentation of ''Chinese Gold'' at NiMK exhibition]]
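The recommendation to agree on a set list of field names can be supported by a simple check before a page is saved. The sketch below flags property names that are not in an agreed vocabulary. The vocabulary shown contains only the four properties named in this report, the annotations are assumed to use the `[[Property::Value]]` form, and the function name and regular expression are our own assumptions.

```python
import re

# Agreed field names; in practice this list would be maintained by the team.
ALLOWED_PROPERTIES = {"Held_in_venue", "Held_in_city", "Component_of", "Documentation_of"}

# Captures the property name in [[Property::Value]] annotations.
PROPERTY_NAME = re.compile(r"\[\[([^\[\]:|]+)::")


def unknown_properties(wikitext: str, allowed=ALLOWED_PROPERTIES) -> list:
    """Return the property names used in `wikitext` that are not in `allowed`."""
    # Spaces and underscores are treated as equivalent in property names.
    used = {name.strip().replace(" ", "_") for name in PROPERTY_NAME.findall(wikitext)}
    return sorted(used - set(allowed))


draft = "Shown at [[Held_in_venue::NiMK]] in [[Held_in_town::Amsterdam]]."
print(unknown_properties(draft))
```

Here the draft uses `Held_in_town` instead of the agreed `Held_in_city`, which is exactly the kind of drift that makes later queries incomplete.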

6. Discussion

By the end of this research session, and along the lines of the criteria given above, we found that each platform served well as a VCS for archiving digital art, but for two different fields. GitLab, being more task-oriented, is more accessible for the curator. MediaWiki, on the other hand, was found to be more content-oriented and more suitable for representation, making it more accessible to the conservator (and/or researcher).

Compared to MediaWiki, GitLab was more technical and imposed less organization and categorization on the data. GitLab could also be used offline once the files had been pulled from the repository onto one’s own personal computer. The local copy can then be branched within one’s own folders, which makes it attractively malleable for the curator. GitLab also allows users to see the size of the archive after cloning the repository onto their own computer. Thus, within a conservation framework, the presentable and explicative nature of MediaWiki makes it the ideal platform for collaborative archiving of digital art. From a curatorial perspective, however, GitLab is the better choice. Since these disciplines often overlap, a system that merged both platforms, rather than the use of both side by side, would be the ideal VCS for the collaborative archiving of digital art.

7. Conclusions

In 'Moving Beyond the Artefact,' William Uricchio argues that the computerized archive allows researchers and/or historians the opportunity to "reflect on the birth and development of the latest 'new' medium" (Uricchio, 135). Digital repositories impose limits of space and resources on the conservation of digital artworks, prompting the demarcation of guidelines for which files are valuable and which are not. In turn, the historian/conservator adopts the role of an active social agent in sustaining contemporary hierarchies of social and cultural power. By using open-source, distributed storage systems for archiving digital art, the conservator contributes to a cultural shift towards participation, collaboration, and the democratization of the author. The early stage of development of the many version control systems available to conservators invites reflection on each platform's technical affordances and on how advantageous these affordances are to this cultural shift. Our comparison of GitLab and MediaWiki, with regard to how far each fosters participatory and/or democratized collaborative archiving of digital art, is our contribution to this long-term disciplinary reflection.

Archiving digital art by means of a VCS implies a merging of the role of the (media) artist with that of the conservator. Through this process, the conservator partly shapes the future representation and/or meaning of the artwork. This is why the need for collaboration in the long-term and open-ended archival process should be thoughtfully considered by programmers, artists, and conservators alike. As Uricchio poses, this consideration should include questions such as "What evaluative criteria should we deploy? And even if we could answer these questions, what would be an appropriate point to ‘freeze’ and hold these dynamic artefacts in the archive? Where do we draw the line between the cultural and the social, between the artefact and the means of its production? And even if we accept the social and acknowledge that patterns of interaction are as important as the text, how do we go about documenting them in meaningful ways?" (Uricchio, 137)

Considering that a sizeable portion of the content used for ‘Chinese Gold’ (2006) has been reappropriated from other uncited sources, a collaborative and granular continuation would allow the files to remain in the same media-scattered and open-sourced form in which the artists found them. Archiving digital artworks by way of a granular, open-sourced versioning system thus suggests a continuation of UBERMORGEN's artistic philosophy and/or intent well beyond their lifespans: a philosophy that respects the democratization of cultural knowledge, the absence of intellectual property rights, and the prioritization of context over concept, and that thus eliminates the need for an established or finalized conceptual direction for artworks. This continuation would then imply that Chinese Gold (2006 - ) is an artwork without an author, without limitations, with no beginning and no end. Encouraging collaborative efforts, and thereby democratizing raw media files, may foster a habit of contextual critical reflection among a wider public.

8. References

- Chacon, Scott and Ben Straub. 2014. Pro Git. Everything You Need to Know About Git. New York, NY: Apress. https://git-scm.com

- Dekker, Annet. 2018. Collecting and Conserving Net Art. London/New York: Routledge.

- de Vries,

- Ernst, Wolfgang. 2009. "Underway to the Dual System: Classical Archives and/or Digital Memory" in Daniels and Reisinger, eds., Netpioneers 1.0, pp. 81-99.

- Fuller, M., Goffey, A., Mackenzie, A., Mills, R. and Sharples, S. 2017. “Big Diff, Granularity, Incoherence, and Production,” In Blom, I. Lundemo, T. and Røssaak, E. (eds.) Memory in Motion: Archives, Technology, and the Social. Amsterdam: Amsterdam University Press, 87-102.

- Mansoux, A. 2017. "Fork the System", chapter 8.2 in Mansoux, Sandbox Culture. London: Goldsmiths University. PhD dissertation. Available online: https://www.bleu255.com/~aymeric/dump/aymeric_mansoux-sandbox_culture_phd_thesis-2017.pdf

- Rochkind, Marc J. 1975. “The Source Code Control System,” IEEE Transactions on Software Engineering. Vol. 1, no. 4, pp. 364-70

- Uricchio, William. 2009. “Moving Beyond the Artefact.” In Digital Material: Tracing New Media in Everyday Life and Technology. MediaMatters. Amsterdam: Amsterdam University Press.

Presentation slides

Slides: Collaborative Archiving Digital Art.pdf (1 MB, uploaded 31 Jan 2018 - 11:44 by LarissaTijsterman)

Topic revision: r11 - 04 Mar 2018, MeganPhipps

Copyright © by the contributing authors. All material on this collaboration platform is the property of the contributing authors.